AI agents are changing what “good traffic” and “bad traffic” mean.

Some automated sessions are legitimate customers delegating work to an agentic browser or consumer AI agent. Others are stealth automation designed to scrape, abuse, defraud, or hijack intent inside authenticated journeys. The operational question is no longer “bot or not?” It’s “what do we trust, for which actions, and under what conditions?”

That framing is exactly why Forrester introduced a new software landscape: The Bot And Agent Trust Management Software Landscape, Q4 2025—an overview of 19 vendors included in the research. HUMAN is proud to be included.

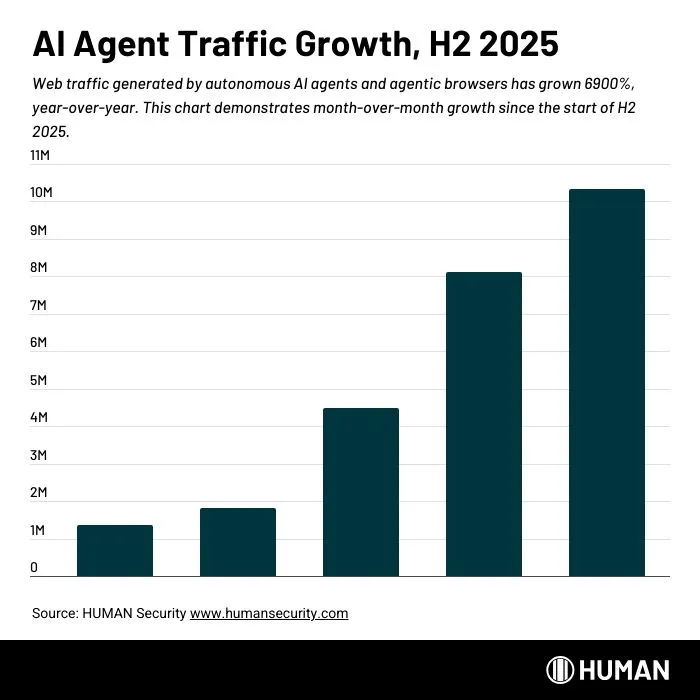

This is a critical shift. At HUMAN, we’ve tracked 6,900% growth in agentic traffic volume throughout 2025. Long before “bot and agent trust management” became a category label, we built AgenticTrust to solve the underlying problem: governing automated actors acting on behalf of real users. We’re excited to see such swift analyst recognition of these market changes.

What is Bot and Agent Trust Management?

Forrester defines Bot and Agent Trust Management as:

“Software that identifies and analyzes the intent of automated traffic directed at an application, establishing ongoing trusted relationships with good bots and AI agents and rejecting and misdirecting malicious bots and AI agents, to protect legitimate customer business while also increasing attacker costs.”

In plain terms: this is the discipline of identifying automated traffic, determining its intent, deciding how much to trust it, and enforcing that decision continuously, so you can enable legitimate bots and agents while stopping abuse.

The market definition emphasizes ongoing trust relationships with “good bots and AI agents,” while rejecting and misdirecting malicious automation.

That “ongoing” part matters. This means static allowlists and brittle fingerprints aren’t a strategy for an internet where agents can be spoofed or hijacked, and the same agent identity can behave differently depending on the human behind it.

From Security-First to Trust-First

Forrester puts the market disruption succinctly: “The rise of AI agents moves the market from security first to trust first.”

AI agents make the old “automation or human” binary obsolete because many human users will choose to use agents to interact with applications. In a trust-first model, firms must establish trusted relationships that identify and enable customers regardless of how they interact with the site, and vendors must break out of the security silo to maintain trust across the customer journey.

Practically, that means:

Agentic Traffic is Growing Quickly

Automation isn’t new. But automation that increasingly represents real customers at scale is.

In our telemetry, we’re seeing exactly how quickly agentic automation is showing up in high-intent customer journeys, and how quickly that behavior is moving toward conversion.

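Looking at AI agent traffic from Black Friday to Cyber Monday, we observed meaningful movement toward conversion behaviors, including increased payment and checkout attempts.*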

*Note: This telemetry reflects trends across HUMAN-verified interactions, not an absolute measure of all AI agent activity or market share.

HUMAN’s Playbook for Bot and Agent Trust Management

A trust program that works in the agentic era typically has five capabilities. If a vendor can’t support these, you’re buying tooling, rather than the operating model you actually need.

1) Visibility at the Actor Level, not just at the Request Level

If you can’t answer “who (or what) is doing this, across sessions, across endpoints,” you’re stuck playing whack-a-mole with rules.

With AgenticTrust, our design premise is visibility first: seeing how autonomous consumer AI agents behave across the customer journey, from browsing to checkout, and understanding intent.
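To make the request-level versus actor-level distinction concrete, here is a minimal sketch in Python. It is not how AgenticTrust works internally; the event fields (`actor_id`, `session_id`, `endpoint`) and the function name are hypothetical, and it assumes some upstream system has already attributed each request to an actor.

```python
from collections import defaultdict


def summarize_by_actor(events):
    """Roll request-level events up into one record per actor.

    Each event is a dict with hypothetical keys: actor_id, session_id, endpoint.
    """
    actors = defaultdict(
        lambda: {"requests": 0, "sessions": set(), "endpoints": set()}
    )
    for event in events:
        record = actors[event["actor_id"]]
        record["requests"] += 1
        record["sessions"].add(event["session_id"])
        record["endpoints"].add(event["endpoint"])
    return dict(actors)


# Illustrative data only: one agent spans two sessions and three endpoints.
events = [
    {"actor_id": "agent-a", "session_id": "s1", "endpoint": "/search"},
    {"actor_id": "agent-a", "session_id": "s2", "endpoint": "/cart"},
    {"actor_id": "agent-a", "session_id": "s2", "endpoint": "/checkout"},
    {"actor_id": "agent-b", "session_id": "s3", "endpoint": "/search"},
]
summary = summarize_by_actor(events)
```

Viewed request by request, these are four unrelated hits. Viewed by actor, `agent-a` is clearly moving from search to checkout across sessions, which is the kind of pattern a rule keyed on single requests would miss.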

2) Verifiable Identity, not Spoofable Identifiers

User-agent strings, IPs, and API keys are useful, but they’re spoofable and frequently abused.

AgenticTrust supports cryptographic verification of autonomous agents using digital signatures that are materially harder to spoof than user-agent strings, IPs, or API keys, enabling an evidence-based trust decision rather than a branding-based one. Major AI developers, including OpenAI and AWS, recognize HUMAN as a trusted solution to verify AI agent traffic.

We also released HUMAN Verified AI Agent as an open-source demonstration of cryptographically authenticated communication using HTTP Message Signatures (RFC 9421), with agent naming aligned to OWASP’s Agent Name Service (ANS). As new agents are built, AI developers can verify their agents cryptographically to enable trusted interactions across the ecosystem.
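For readers who want a feel for what signing a request involves, the sketch below builds an RFC 9421-style signature base over three derived components and signs it. It is a simplified illustration, not the HUMAN Verified AI Agent code: it uses the spec's `hmac-sha256` algorithm with a shared secret so that it runs on the Python standard library alone, whereas agent verification in practice would more likely rely on an asymmetric algorithm such as Ed25519. The key ID, hostname, and secret are made up, and a real verifier would also parse the `Signature-Input` and `Signature` headers and check the `created` timestamp.

```python
import base64
import hashlib
import hmac


def signature_base(method, authority, path, created, keyid):
    """Build the string to be signed over @method, @authority, and @path."""
    covered = '("@method" "@authority" "@path")'
    params = f'{covered};created={created};keyid="{keyid}"'
    lines = [
        f'"@method": {method.upper()}',
        f'"@authority": {authority.lower()}',
        f'"@path": {path}',
        f'"@signature-params": {params}',
    ]
    return "\n".join(lines)


def sign(secret, base):
    """Return the base64-encoded HMAC-SHA256 of the signature base."""
    digest = hmac.new(secret, base.encode("ascii"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def verify(secret, base, signature_b64):
    """Recompute the signature and compare it in constant time."""
    expected = sign(secret, base)
    return hmac.compare_digest(expected.encode(), signature_b64.encode())


# Hypothetical values for illustration only.
secret = b"demo-shared-secret"
base = signature_base("POST", "shop.example", "/checkout", 1735689600, "demo-agent-key")
signature = sign(secret, base)
```

Because the method, host, and path are all covered by the signature, replaying it against a different endpoint fails verification. A user-agent string offers no comparable guarantee.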

This matters because “trust” without a verified foundation turns into a marketing claim.

3) Governance Controls that Shape Behavior, not just Block Traffic

If your only enforcement action is “block” or “allow” at the agent level, you’ll either break legitimate experiences or allow too much abuse to preserve user experience.

AgenticTrust is built for guardrails. Customers can set granular action-based rules for each agent, allowing one to freely browse but not create an account or check out, while choosing a different set of guardrails for another agent. By governing how consumer agents interact with your site, you can enable agentic commerce without absorbing uncontrolled risk.
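As a rough illustration of action-based rules, the sketch below maps each agent to the set of actions it may perform and falls back to browse-only for anything unverified or unknown. The agent names, action names, and the `decide` function are hypothetical and are not AgenticTrust configuration; the point is only that the decision is made per action rather than per agent.

```python
# Hypothetical per-agent guardrails: which actions each verified agent may take.
AGENT_RULES = {
    "agent-a": {"browse", "search", "checkout"},
    "agent-b": {"browse", "search"},
}

# Assumed default for unverified or unlisted agents: read-only browsing.
DEFAULT_ACTIONS = {"browse"}


def decide(agent_id, verified, action):
    """Return "allow" or "deny" for one action by one agent."""
    if verified:
        allowed = AGENT_RULES.get(agent_id, DEFAULT_ACTIONS)
    else:
        # An unverified identity claim earns only the default guardrails.
        allowed = DEFAULT_ACTIONS
    return "allow" if action in allowed else "deny"
```

With these rules, `agent-b` can search the catalog but cannot check out, and a request that merely claims to be `agent-a` without verification is limited to browsing.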

4) Coverage Across the Full Customer Journey

AI agents are a continuation of a long list of automated threats. The interesting thing about today’s agents is not that they introduce entirely new or more sophisticated threats, but that they both lower the barrier to entry for fraud and enable unprecedented speed and scale.

Today, AI is just another tool in fraudsters’ toolkits, in addition to bots and manual fraud. Bad actors use a combination of these tactics to carry out fraud and abuse across the customer journey. Their attacks hit login, APIs, scraping targets, checkout, and promotions.

HUMAN’s application protection suite is organized around customer-journey outcomes—like bot detection and mitigation, account takeover prevention, and scraping protection—so teams can address abuse patterns end to end across sessions, rather than with isolated point tools at disparate interaction points.

5) A Real Adversary-Cost Function: Disrupting Operations, not just Requests

If you assume “better detection” ends the story, you’re assuming attackers don’t adapt.

They do. Which is why threat intelligence and takedown capability matter in this category.

HUMAN’s Satori Threat Intelligence and Research Team focuses on discovering, analyzing, and disrupting threats to drive actionable insights and collaborative takedowns of cybercrime. Our detection and threat research experts are constantly working to discover new detection signals, de-obfuscate stealthy agents, and find (and mitigate) new threats.

In a trust-first world, defense isn’t just classification; it’s pressure on the attacker’s economics.

How HUMAN Led in 2025: Building the Trust Layer for Bots and AI Agents

If your definition of “leader” is “most aggressive marketing,” you’ll get the wrong vendor.

A defensible definition is:

That’s what HUMAN built in 2025, and it’s why we invested early in:

HUMAN’s approach isn’t bolting half-baked features on bot detection. It’s extending a trust model that’s already built to protect digital journeys from fraud and abuse into an internet where AI agents increasingly mediate those journeys.

Here’s what HUMAN shipped this year and what we’re doing differently:

Visibility, Governance, and Control across Bots, Humans, and AI Agents

Verified Identity for AI Agents

Control, Governance, and Monetization for LLM Scraping

Partnerships that Connect Trust Decisions to Business Outcomes

Open Standards Leadership to Help Create Enforceable Trust

The Next Steps to Safely Enabling Agentic Commerce

The shift to bot and agent trust management is not a branding exercise. It is an operating requirement for digital commerce as AI agents become a mainstream customer interface. The winners will be the organizations that can distinguish legitimate agentic customers from abuse, verify agent identity when possible, and govern what automated actors are allowed to do, all without degrading conversion.

If you’re facing scraping pressure, rising takeover attempts, or rapidly growing agentic traffic, the question is no longer “bot or not?” It is “what do we trust, for which actions, and under what conditions?” HUMAN helps you answer that with measurable controls that preserve customer experience and increase attacker costs.

If you’re ready to get visibility and control over AI agents and agentic browsers today, book a demo to see how HUMAN can help. If you’re just starting your journey, check out these resources:

FAQ

What is bot and agent trust management?

Bot and agent trust management is software that identifies and analyzes the intent of automated traffic, establishes ongoing trusted relationships with good bots and AI agents, and rejects or misdirects malicious bots and agents.

Why is the bot management market shifting from “security-first” to “trust-first”?

Because AI agents blur the “automation vs. human” binary: many human users will choose to use agents to interact with applications, and blocking too much automation can block customers and send them elsewhere.

How fast is agentic traffic growing, and why does that matter for commerce?

In HUMAN’s telemetry, agentic traffic grew 6,900% year over year, with meaningful movement toward conversion behaviors (including increased payment and checkout attempts) during Black Friday–Cyber Monday. This indicates agents are not just crawling; they’re participating in high-intent customer journeys.

What’s the practical difference between “allow/block” and trust-first controls?

In a trust-first model, enforcement expands beyond binary allow/block to action-level governance: you may allow an agent to browse and search, while preventing account creation or checkout, based on identity, intent, and context.

How does cryptographic verification of AI agents work, and why is it important?

Cryptographic verification uses digital signatures to prove an agent’s identity in a way that is materially harder to spoof than user-agent strings, IPs, or API keys. AgenticTrust supports signature-based verification for evidence-based trust decisions, and HUMAN Verified AI Agent demonstrates the approach using HTTP Message Signatures (RFC 9421), with agent naming aligned to OWASP’s Agent Name Service.

What capabilities should buyers look for in bot and agent trust management?

HUMAN’s playbook recommends five capabilities: actor-level visibility, verifiable identity, governance controls that shape behavior (not just block), coverage across the full customer journey (login, APIs, scraping, checkout, promo), and a real adversary-cost function via threat intelligence and disruption.

*Forrester Research, Inc., The Bot And Agent Trust Management Software Landscape, Q4 2025, by Sandy Carielli with Amy DeMartine, Katie Vincent, and Peter Harrison, December 18, 2025.

Forrester does not endorse any company, product, brand, or service included in its research publications and does not advise any person to select the products or services of any company or brand based on the ratings included in such publications. Information is based on the best available resources. Opinions reflect judgment at the time and are subject to change. For more information, read about Forrester’s objectivity here.