Editors’ note: In this series, “Unmasking Malvertising,” we explore the various ways malvertising is executed, to help us collectively see the digital landscape not just as defenders but through the eyes of those who carry out these attacks. In this installment, we explore “cloaking,” or how malvertisers create ads with split personalities that appear harmless to security systems while targeting visitors with malicious content.

Like Dr. Jekyll’s respectable facade concealing Mr. Hyde’s monstrous nature, cloaking is a malicious behavior where ads present a harmless face to security systems while harboring a malicious alter ego that emerges only when the right circumstances are present.

Understanding this deception and the dual nature of these Jekyll and Hyde creatives is crucial for building the adaptive protection needed to stay ahead of these evolving threats.

Digital Jekyll and Hyde: The Nature of Cloaking

In malvertising, cloaking is the practice of hiding the true nature of an advertisement to avoid detection. Bad actors cloak, or disguise, the malicious image or landing page of an ad by swapping in innocuous versions of those parts of the creative. The bad actor chooses when to display the innocuous version and when to deliver the malicious image (often clickbait) or the malicious landing page.

Think of it like a billboard that displays family-friendly content when advertising inspectors drive by but shows inappropriate material to regular traffic. This sneaky swapping serves a specific purpose: to avoid having malware campaigns caught and blocked, or entire operations shut down.

The Evolution of Cloaking Tactics

Early cloaking methods relied on simple timing rather than technical sophistication. Bad actors would submit innocent-looking creatives for platform review, wait for approval, then replace the approved content with malicious payloads. This straightforward bait-and-switch approach worked because most platforms had limited monitoring capabilities and checked the ads only once.

As ad platforms began losing valuable business relationships due to malware incidents, they were forced to become more sophisticated, implementing manual creative reviews and building alerts for when creative assets were swapped directly or detected as new.
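The swap alerts described above can be approximated by recording a digest of each asset at approval time and comparing it on every later fetch. The following is a minimal sketch under that assumption; the function names and the in-memory store are hypothetical and do not represent any platform’s actual API.

```python
import hashlib

# Hypothetical in-memory store: creative ID -> SHA-256 digest recorded at approval time.
approved_digests: dict[str, str] = {}


def digest(asset_bytes: bytes) -> str:
    """Return a hex SHA-256 digest of the raw creative asset."""
    return hashlib.sha256(asset_bytes).hexdigest()


def approve(creative_id: str, asset_bytes: bytes) -> None:
    """Record the digest of the asset that passed review."""
    approved_digests[creative_id] = digest(asset_bytes)


def check(creative_id: str, asset_bytes: bytes) -> str:
    """Classify a re-fetched asset as 'unchanged', 'swapped', or 'new'."""
    expected = approved_digests.get(creative_id)
    if expected is None:
        return "new"
    return "unchanged" if digest(asset_bytes) == expected else "swapped"


approve("creative-1", b"harmless banner bytes")
print(check("creative-1", b"harmless banner bytes"))  # unchanged
print(check("creative-1", b"different bytes"))         # swapped
print(check("creative-2", b"never reviewed"))          # new
```

A check like this only catches a direct replacement of the stored asset. It cannot see an asset that is served conditionally, which is why the simple swap gave way to the more elaborate techniques discussed later in this article.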

In response to this evolving threat landscape, HUMAN enhanced our malvertising defense technology to better protect publisher and platform partners from these scams, detecting and blocking cloaking attacks used to deliver malware at scale.

Today’s bad actors have dramatically evolved their tactics, developing far more sophisticated cloaking methods that go well beyond simple timing-based swaps. This evolution has transformed cloaking from crude manual processes into automated, intelligent deception systems that can adapt to different environments and detection methods.

A Cloaking Attack in Action

To understand how modern cloaking works in practice, let’s examine a typical attack scenario. Unlike the simple swap methods of the past, today’s cloaking attacks use environmental detection to serve completely different experiences based on who’s viewing the ad.

Here’s how a modern cloaking attack unfolds:

The Anatomy of Cloaked Content

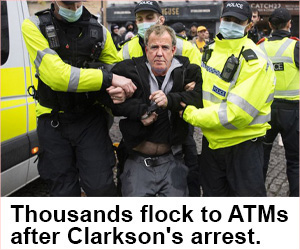

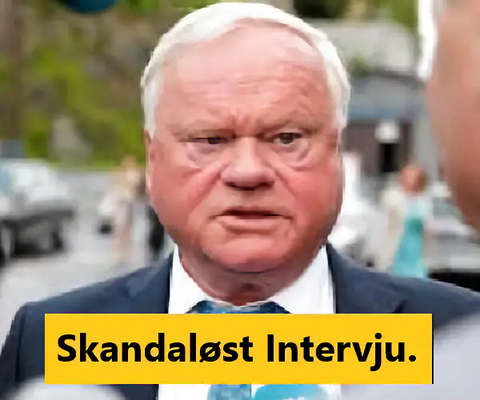

The celebrity clickbait scenario described above illustrates one prevalent method, with content that follows predictable patterns revealing the calculated strategies bad actors employ. These malicious creatives typically feature:

Cloaking isn’t only confined to the ad creatives. Bad actors utilize it within the landing page environments as well. Visitors or reviewers encounter what we call “shield pages”—innocuous pages that appear legitimate while concealing the actual malicious destination.

For example, clicking a celebrity scandal ad might first land visitors on a generic news-style page before automatically redirecting to the cryptocurrency scam. This multi-layer approach makes detection even more difficult, as the initial landing page appears completely benign to security scanners while the real malicious activity happens steps later in the user journey.
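The shield-page pattern suggests why a scanner that inspects only the first landing page can miss the real destination. The sketch below follows a redirect chain to its end and reports whether the final page sits on a different host than the first one. It is a toy model: the `REDIRECTS` map, the `.example` URLs, and both function names are hypothetical, and a real scanner would have to resolve HTTP and script-driven redirects instead of reading a dictionary.

```python
from urllib.parse import urlparse

# Hypothetical redirect map standing in for real HTTP or script-driven redirects.
REDIRECTS = {
    "https://news-style.example/story": "https://hop.example/r?id=1",
    "https://hop.example/r?id=1": "https://crypto-offer.example/signup",
}


def follow_chain(start_url: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Return every URL visited, stopping at the final page, a loop, or max_hops."""
    chain = [start_url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        if next_url in chain:  # redirect loop, stop following
            break
        chain.append(next_url)
    return chain


def leaves_landing_host(chain: list[str]) -> bool:
    """True when the final page is on a different host than the first landing page."""
    return urlparse(chain[0]).hostname != urlparse(chain[-1]).hostname


chain = follow_chain("https://news-style.example/story", REDIRECTS)
print(len(chain) - 1, "redirects")   # 2 redirects
print(leaves_landing_host(chain))    # True
```

A change of host is only a signal worth investigating, since legitimate campaigns also redirect through trackers and click servers.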

Examples of malicious ads (image panels: Shield Creative, Malicious Creative, Shield Page, Malicious Lander)

How Cloaking Works: The Technical Mechanics of Deception

Cloaking techniques span a spectrum of complexity. At the simpler end, assets are swapped on the backend after a creative has passed onboarding checks and gone live.

Moving toward the technical side of the spectrum, defenders start to observe sample-rate cloaking: this can be as simple as a random number generator that shows the malicious asset on a small percentage of sessions, while the rest of the traffic receives “safe” assets.
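A little arithmetic shows why sample-rate cloaking frustrates one-off scans. If the malicious asset appears independently on a fraction p of sessions, the chance that n scans see it at least once is 1 - (1 - p)^n. The sketch below works this out; the 1% rate is an illustrative assumption, not a measured figure, and the function names are hypothetical.

```python
import math


def detection_probability(sample_rate: float, scans: int) -> float:
    """Chance that at least one of `scans` independent sessions gets the malicious asset."""
    return 1.0 - (1.0 - sample_rate) ** scans


def scans_needed(sample_rate: float, target: float) -> int:
    """Smallest number of independent scans whose detection probability reaches `target`."""
    if not 0.0 < sample_rate < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("sample_rate and target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - sample_rate))


rate = 0.01  # illustrative assumption: malicious asset on 1% of sessions
print(f"{detection_probability(rate, 1):.3f}")    # 0.010
print(f"{detection_probability(rate, 100):.3f}")  # 0.634
print(scans_needed(rate, 0.95))                   # 299
```

Under these assumptions a single review sees the malicious asset about once in a hundred tries, and it takes roughly 300 independent scans to reach a 95% chance of catching it even once.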

At the far end of the technical spectrum is on-page or server-side fingerprinting, which analyzes a visitor’s device, browser, or country in real time to decide which creative to show.
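One way to reason about fingerprint-based cloaking is differential: request the same ad under several simulated profiles and flag it when the responses disagree. The sketch below is a toy model of that idea. `Profile`, `toy_ad_server`, its targeting rule, and the creative names are all hypothetical stand-ins, not a real ad server or any vendor’s detection logic.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Profile:
    """Simplified request attributes a cloaker might inspect (illustrative only)."""
    device: str
    browser: str
    country: str


def toy_ad_server(profile: Profile) -> str:
    """Hypothetical cloaked server: one targeted profile gets a different creative."""
    targeted = profile.device == "mobile" and profile.country == "US"
    return "clickbait-creative" if targeted else "shield-creative"


def find_divergence(
    serve: Callable[[Profile], str], profiles: list[Profile]
) -> dict[str, list[Profile]]:
    """Group profiles by the creative they received; more than one group suggests cloaking."""
    groups: dict[str, list[Profile]] = {}
    for profile in profiles:
        groups.setdefault(serve(profile), []).append(profile)
    return groups


probes = [
    Profile("desktop", "headless-chrome", "US"),  # resembles a scanner
    Profile("mobile", "safari", "US"),
    Profile("mobile", "chrome", "DE"),
]
groups = find_divergence(toy_ad_server, probes)
print(sorted(groups))  # ['clickbait-creative', 'shield-creative']
print("possible cloaking" if len(groups) > 1 else "consistent")
```

Divergence alone is not proof, because legitimate ads also vary by device and geography. It is a prompt to compare what each group actually received.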

Why Traditional Defenses Fall Short Against Cloaking

The fundamental challenge with cloaking lies in its exploitation of how traditional security systems operate. Cloaking attacks specifically target the gaps and assumptions built into conventional detection methods, making them particularly difficult for standard security approaches to identify and stop.

Traditional security approaches that interrogate creatives within sandboxes cannot detect what only activates under specific real-time conditions. These sandbox environments emulate real-world environments with controlled and predictable settings that malvertisers have learned to exploit. Bad actors study these security improvements, adapt their techniques, and evolve their cloaking methods to stay ahead of these signature- and emulation-based detection methods.

Cloaking damages publisher brand reputation and visitor trust by allowing bad actors to get their malicious creatives in front of visitors on trusted sites, bypassing the very security measures designed to prevent such attacks. Higher success rates, extended campaign lifespans, and reduced detection risk make cloaking a worthwhile investment for bad actors. Sophisticated cloaking infrastructure delivers superior returns across all metrics that matter to malicious actors.

The Ultimate Deception Requires Ultimate Vigilance

In this digital age of Jekyll and Hyde advertising where ads conceal their malicious nature behind respectable facades, detecting and fighting cloaking requires moving beyond traditional security approaches that rely on static analysis and predictable sandbox environments. Publishers and platforms need adaptive protection that can see through environmental deception and catch attacks in real time. This demands embracing the behavioral detective mindset: thinking like malicious actors and developing defenses that evolve as quickly as the threats themselves. Only solutions that can see both faces of these deceptive creatives will successfully protect publishers and visitors alike.

HUMAN Malvertising Defense

Combating cloaking’s sophisticated deceptions requires more than traditional security approaches can deliver. HUMAN’s proven Malvertising Defense solution combines expert threat research, real-time environmental simulation, and specialized behavioral analysis that can expose the sophisticated deceptions behind the masks bad actors wear.

The key advantage lies in our runtime behavioral analysis approach. Rather than relying on static blocklists or predictable sandbox environments, HUMAN’s Malvertising Defense observes how ads behave in real time, powered by advanced machine learning, real-time threat intelligence, and years of expert research. Cloaking attempts are caught and blocked the moment they reveal their true malicious nature in the real world.

