Last year, billions of music streams were consumed by bots, diverting millions in royalties away from real artists.

This is streaming fraud: artificial plays generated to mimic genuine listener engagement, for example by bots or listener farms that loop tracks to collect royalties without any real audience. With the rise of generative AI, the problem is poised to get significantly worse.

In this Satori Threat Intelligence post, we’ll break down how fraudsters are using generative AI to mass-produce music, generate fake streams, and manipulate playlists, and how the industry can fight back.

The Micro-Cents Paradox: Why Streaming Fraud is So Profitable

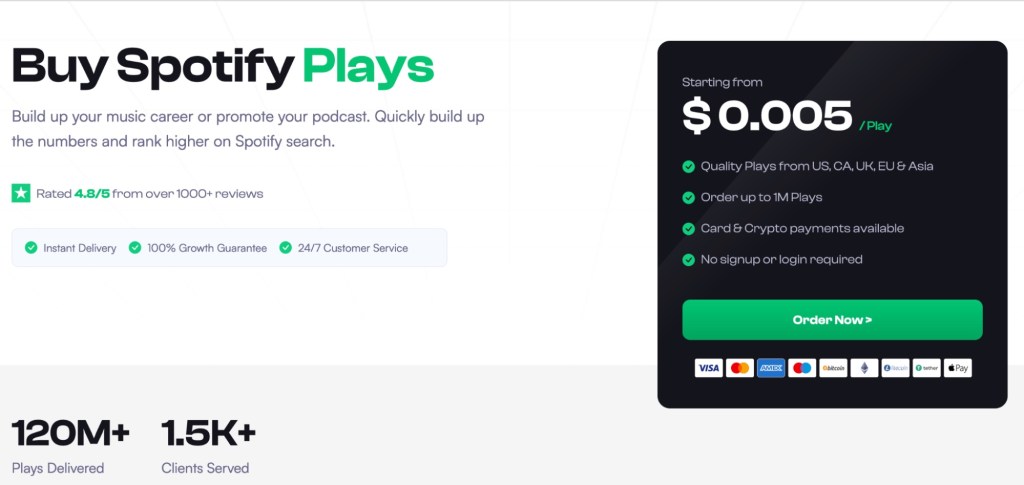

At the center of most large-scale streaming fraud schemes are organized fraud operators, ranging from individual actors to well-resourced groups. When a track is streamed, the payout to the rightsholder is typically around $0.003 to $0.005 per play. That may seem insignificant, but when a threat actor can generate millions of tracks and billions of streams, the total grows in direct proportion to volume and quickly reaches millions of dollars. Because royalties are paid from a limited pool, every fraudulent claim dilutes what is left for legitimate artists, songwriters, and rightsholders.
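
To put the per-stream figures in perspective, the arithmetic can be sketched in a few lines of Python. This is an illustration only: the function name `estimated_payout` and the stream volumes are made up for the example, and the two rates are simply the ends of the range quoted above, not actual platform payouts.

```python
from decimal import Decimal

# Ends of the per-play range quoted in this post (illustrative, not platform rates).
LOW_RATE = Decimal("0.003")
HIGH_RATE = Decimal("0.005")


def estimated_payout(streams: int, rate_per_stream: Decimal) -> Decimal:
    """Gross payout for a number of streams at a flat per-stream rate."""
    return rate_per_stream * streams


# Hypothetical stream volumes, chosen only to show how the totals scale.
for streams in (1_000, 1_000_000, 1_000_000_000):
    low = estimated_payout(streams, LOW_RATE)
    high = estimated_payout(streams, HIGH_RATE)
    print(f"{streams:>13,} streams: ${low:,.2f} to ${high:,.2f}")
```

The payout grows in step with volume: a thousand streams is worth a few dollars, while a billion streams at these rates comes to roughly $3 million to $5 million. Volume, not the per-play rate, is what makes the scheme pay.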

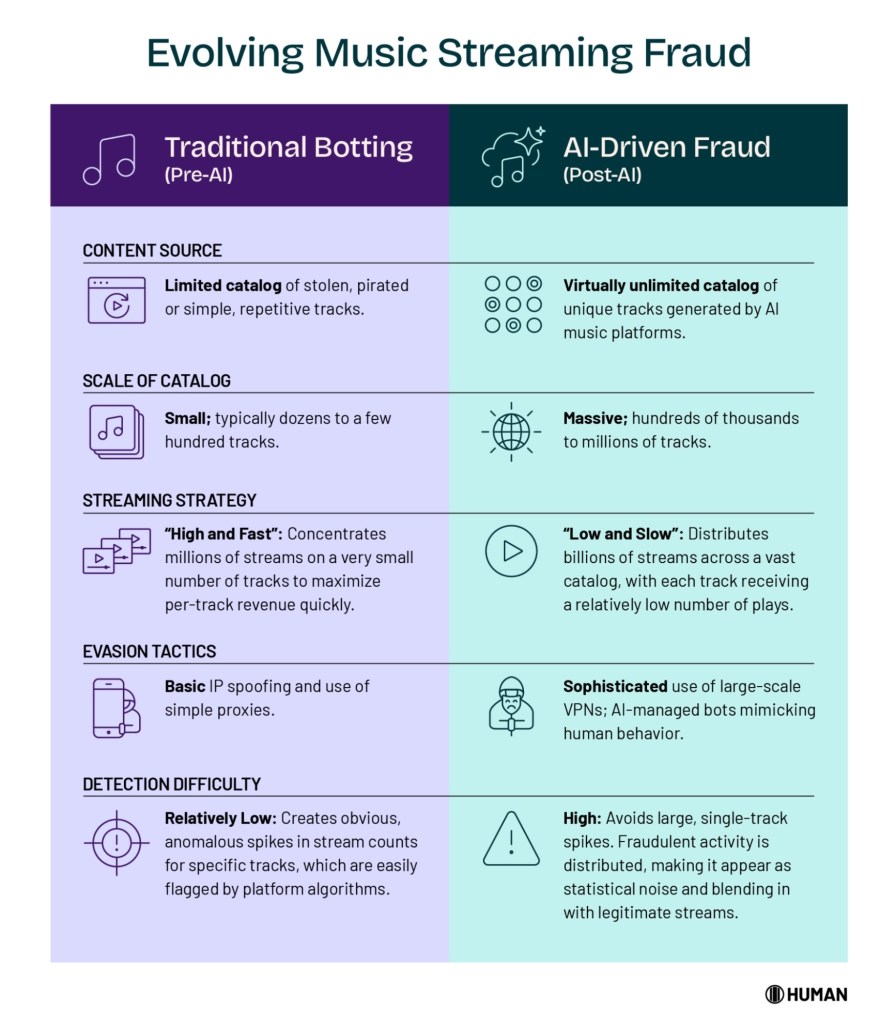

Historically, the biggest obstacle for would-be fraudsters was producing enough music to make streaming scams profitable. Writing, recording, and uploading tracks required significant time and skill. Generative AI has eliminated that barrier to entry, allowing threat actors to create thousands of unique, royalty-eligible tracks in minutes. This shift has turned streaming fraud from a niche scheme into a large-scale operation. But how exactly do threat actors perform streaming fraud at a technical level?

The Tactics Powering Streaming Fraud

There are two key components to a successful streaming fraud operation: first is the scalable creation of content using AI, and second is the generation of seemingly authentic traffic with fake engagement such as likes, views, comments, and clicks, all designed to mimic legitimate user behavior to evade detection.

Content Generation: AI as the New Music Mill

AI has fundamentally shifted streaming fraud: threat actors can now generate a near-infinite supply of content for pennies. While threat actors have historically used simple, repetitive loops, HUMAN has seen an overall move towards the use of advanced generative AI models. These models can produce thousands of unique tracks in passively consumed genres like ambient, lo-fi, and instrumental music.

HUMAN’s Satori team is seeing threat actors use AI to create large playlists of generated tracks under dozens of fake artist names, where each track is subtly different so that it bypasses basic audio fingerprinting detection. As detection methods improve, fraudsters also evolve: we have already seen AI music employ more sophisticated techniques to mimic human composition, making it even harder to distinguish between legitimate artistry and fraudulent content. This content can include:

This content is then uploaded to major streaming platforms like Spotify, Apple Music, or YouTube through digital distribution services, often using numerous fake artists or creator profiles.
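
A toy example shows why subtly different tracks can slip past an exact-match check yet still be caught by a similarity comparison. Everything here is an assumption made for illustration: the four-number feature vectors are invented, the SHA-256 digest only stands in for an exact-match fingerprint, and cosine similarity is a generic technique, not a description of how HUMAN or any streaming platform fingerprints audio.

```python
import hashlib
import math


def exact_fingerprint(features):
    """Exact-match stand-in: any small change in the features changes the digest."""
    payload = ",".join(f"{value:.4f}" for value in features).encode()
    return hashlib.sha256(payload).hexdigest()


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors (invented numbers, not real audio features).
track_a = [0.82, 0.10, 0.45, 0.33]
track_b = [0.81, 0.11, 0.46, 0.33]  # subtly altered variant of track_a
track_c = [0.05, 0.90, 0.12, 0.70]  # unrelated track

SIMILARITY_THRESHOLD = 0.99  # illustrative cut-off, not a tuned value

print(exact_fingerprint(track_a) == exact_fingerprint(track_b))    # exact match fails
print(cosine_similarity(track_a, track_b) > SIMILARITY_THRESHOLD)  # near-duplicate
print(cosine_similarity(track_a, track_c) > SIMILARITY_THRESHOLD)  # unrelated
```

The exact digests of the two variants differ, while their similarity score stays very close to 1. A real system would need features derived from the audio itself and a threshold validated against known-good catalogs.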

Generating Fake Traffic and Engagement

Once the content is uploaded, the next step is to make it appear popular. Threat actors employ several methods to generate fake traffic and engagement, including tools and techniques such as traditional botnets, fake accounts and account takeover, click farms, and proxies. Fraudsters also employ streaming-specific techniques such as playlist manipulation and “low and slow” playback methods:

In addition, threat actors use open platform distributors like DistroKid or TuneCore to upload AI-generated content to Spotify, Apple Music, and others, often creating numerous fake artist profiles.

How Fraudsters Manage Botnets with AI

The role of AI extends beyond content creation; sophisticated fraudsters now use AI to manage the bot networks themselves. These AI systems can automate the process of spoofing digital identities, rotating through proxies and VPNs to simulate geographically diverse human listeners and mimicking human-like listening patterns to better bypass anti-fraud detection systems employed by streaming platforms. This creates a dynamic where AI is not only the factory for the fraudulent product (the music) but also the engine for its fraudulent consumption (the streams):

Listener Farms & Automated Playlist Stuffing

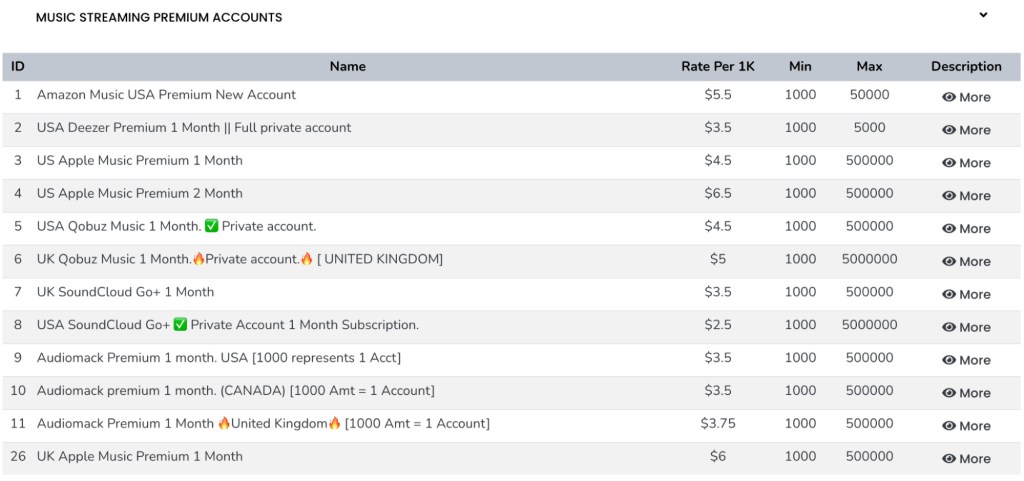

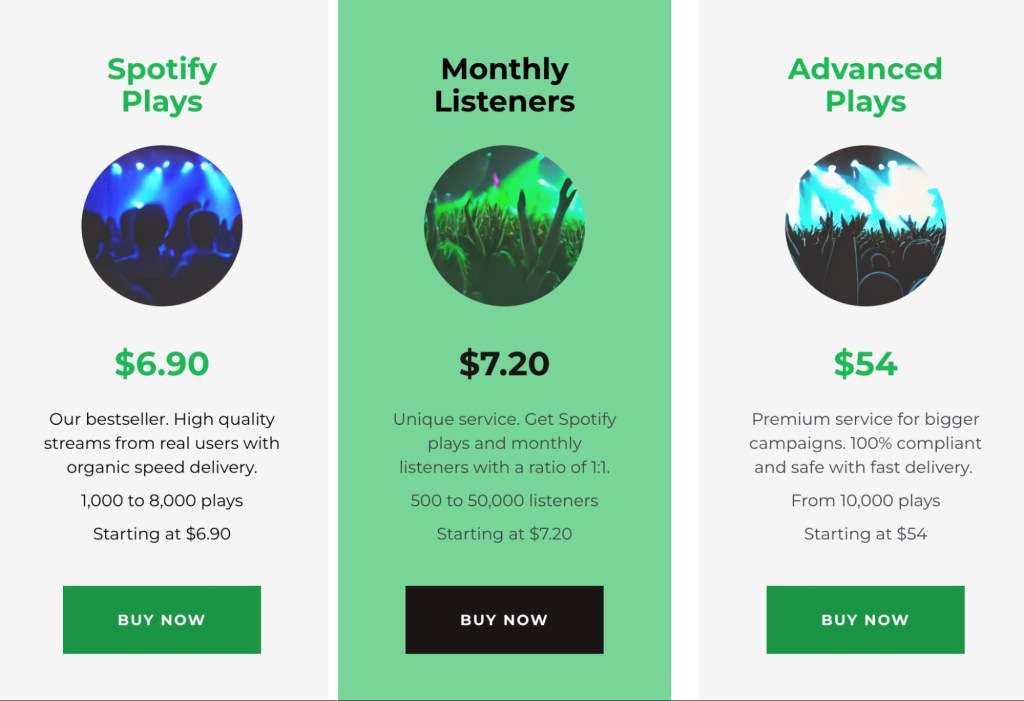

On underground marketplaces, including the dark web and private forums, threat actors offer a range of sophisticated services designed to generate fraudulent streams. These offerings include everything from selling pre-loaded, “ready to use” accounts on major streaming platforms to comprehensive fraud-as-a-service solutions.

At the core of these operations are listener farms, which, unlike purely bot-based traffic, utilize large arrays of real physical devices, often hundreds of smartphones programmed to stream music 24/7.

To evade detection and appear legitimate, these farms are equipped with advanced botnets that leverage residential proxies, VPNs, browser automation tools such as Selenium and Puppeteer, and other tooling. Together, these techniques make fraudulent activity appear as legitimate streams originating from countless different IP addresses across various geographic locations. The result is a scalable, high-volume fraud infrastructure that clients can hire for two primary purposes: to artificially inflate an artist’s popularity metrics or to directly siphon royalties from the platform’s pro-rata shared pool, diverting income from legitimate creators.
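
The effect on a pro-rata pool can be shown with a small worked example. This is a simplified sketch: it assumes a single fixed pool split purely by share of total streams, and the pool size, names, and stream counts are hypothetical. Real royalty calculations involve more terms than this.

```python
from fractions import Fraction


def pro_rata_payouts(pool, streams_by_rightsholder):
    """Split a fixed pool in proportion to each rightsholder's share of streams."""
    total = sum(streams_by_rightsholder.values())
    if total == 0:
        return {name: Fraction(0) for name in streams_by_rightsholder}
    return {
        name: Fraction(pool) * Fraction(count, total)
        for name, count in streams_by_rightsholder.items()
    }


POOL = 1_000_000  # hypothetical royalty pool, in dollars

# Hypothetical stream counts for two legitimate artists.
legit_streams = {"artist_a": 600_000, "artist_b": 400_000}
before = pro_rata_payouts(POOL, legit_streams)

# Same period, with fraudulent streams added to the same pool.
with_fraud = dict(legit_streams, fraud_farm=250_000)
after = pro_rata_payouts(POOL, with_fraud)

for name in with_fraud:
    print(name, float(before.get(name, 0)), "->", float(after[name]))
```

In this example the legitimate artists' stream counts do not change, yet their payouts fall from $600,000 and $400,000 to $480,000 and $320,000, because the fraudulent streams claim $200,000 of the same fixed pool.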

Fraudsters also perform playlist stuffing, in which they create playlists with popular-sounding titles (such as “Rainy Day Lo-Fi” or “Focus Music for Studying”) and populate them with their AI-generated tracks alongside a few legitimate hits. They then use listener farms to drive traffic to these playlists, which can occasionally trick the platform’s algorithm into recommending them to real users.
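
From the defensive side, the round-the-clock playback of listener-farm devices is one pattern that playback logs can expose. The sketch below is a deliberately simple heuristic under stated assumptions: the event format `(account_id, day, seconds_played)`, the function name, the sample accounts, and the 20-hour threshold are all hypothetical, and it is not a description of any platform's actual detection logic.

```python
from collections import defaultdict


def flag_round_the_clock_accounts(events, max_daily_hours=20.0):
    """Return accounts whose total playback on any single day exceeds the threshold.

    events: iterable of (account_id, day, seconds_played) tuples (assumed format).
    """
    seconds_per_account_day = defaultdict(float)
    for account_id, day, seconds_played in events:
        seconds_per_account_day[(account_id, day)] += seconds_played

    return {
        account_id
        for (account_id, _day), total_seconds in seconds_per_account_day.items()
        if total_seconds / 3600 > max_daily_hours
    }


# Hypothetical playback events, invented for illustration.
sample_events = [
    ("farm-device-17", "day-1", 12 * 3600),
    ("farm-device-17", "day-1", 11 * 3600),  # 23 hours in one day
    ("listener-a", "day-1", 5400),
    ("listener-a", "day-2", 3600),
]

print(flag_round_the_clock_accounts(sample_events))
```

A threshold like this only catches the crudest farms. The “low and slow” playback methods mentioned earlier are designed to stay under exactly this kind of limit, so a volume check would need to be combined with other signals.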

How to Identify Nonhuman Artists

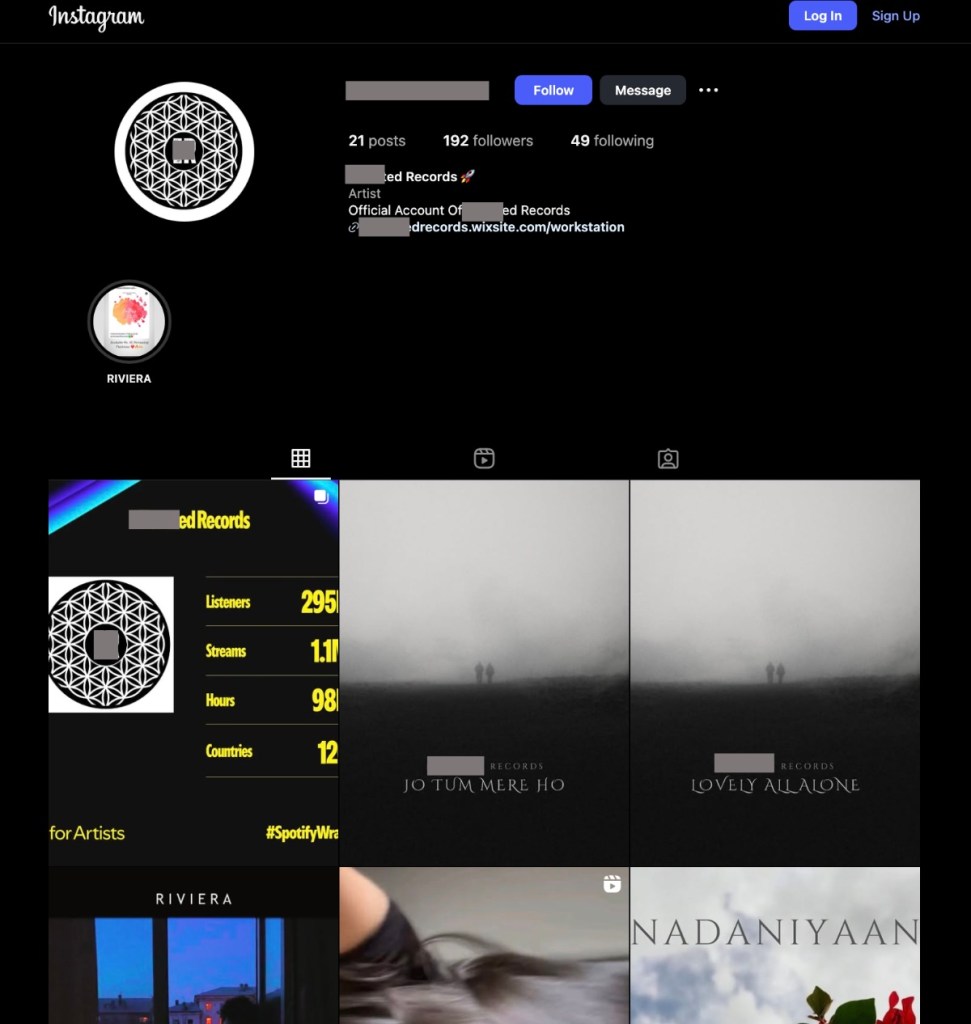

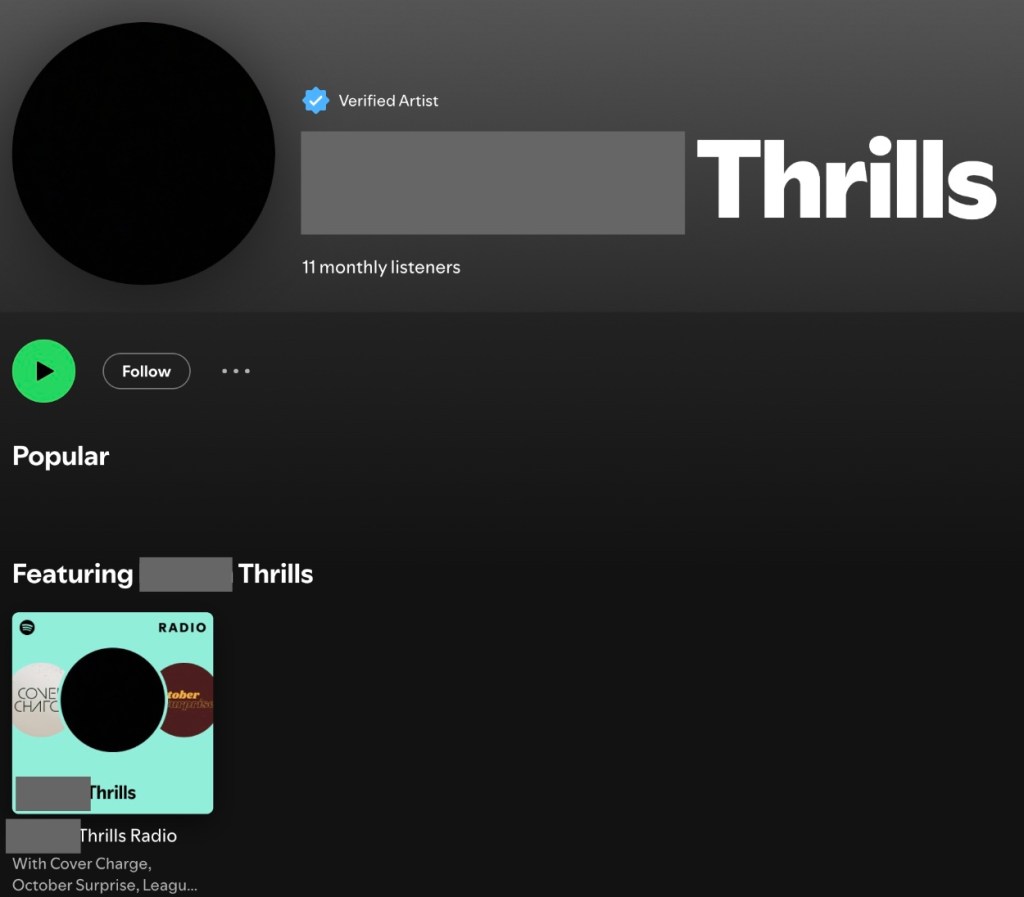

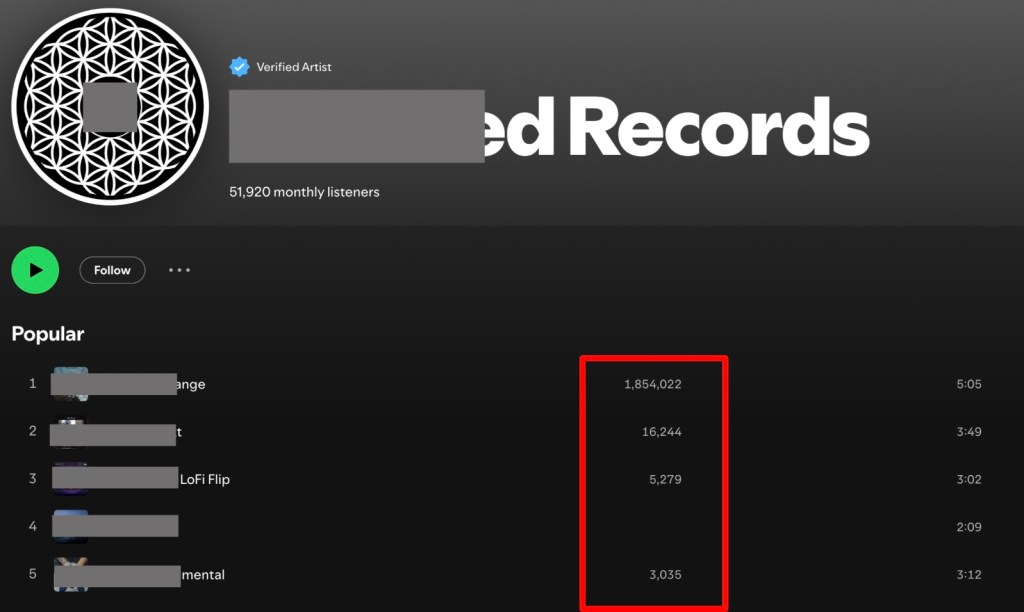

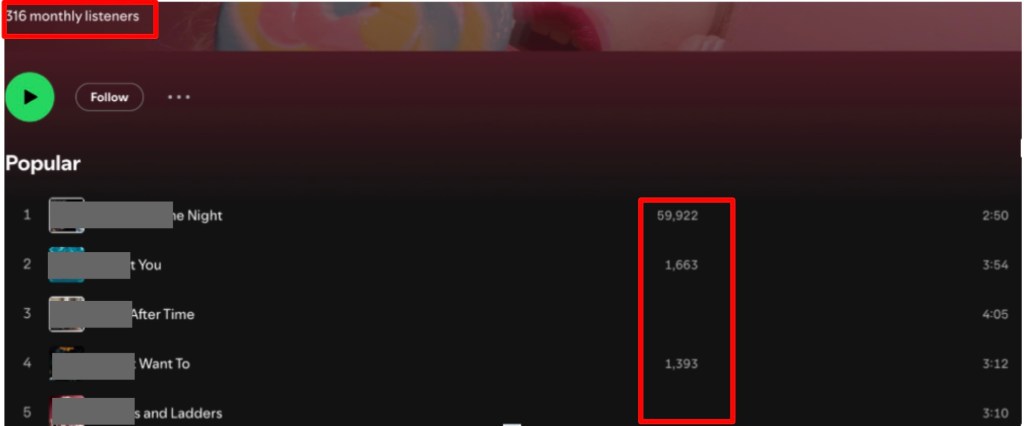

The most telling characteristic of a fraudulent or nonhuman artist is their existence as a “digital ghost,” a phantom that exists only within the streaming platform’s ecosystem:

Beyond profiles, streaming platform data is a powerful diagnostic tool for identifying potential artificial streaming activity, which can include:

How the Industry is Fighting Back

The music industry is joining forces to battle this growing threat. A notable initiative is the formation of the Music Fights Fraud Alliance, a coalition of major and independent record labels, distributors and streaming services. This alliance includes leading organizations such as Universal Music Group, Warner Music Group and Sony Music Entertainment, alongside Spotify, Amazon Music and YouTube Music. Through coordinated information sharing and joint strategies, the alliance seeks to enhance the identification and prevention of fraudulent activities within digital streaming.

Into the Future

While AI presents exciting creative possibilities for musicians, it also plays a major role in streaming fraud, which is set to escalate as generative AI advances. Beyond the current trend of low-effort, generic tracks flooding platforms, we are likely to encounter highly convincing deepfakes: AI-generated audio and video that closely emulates the unique styles of real popular artists, directly challenging the integrity of royalty distribution.

Fraudulent operations will also evolve, deploying sophisticated bots that replicate nuanced behaviors of genuine listeners. These bots will no longer simply inflate play counts; instead, they will curate seemingly authentic listening histories, interact with playlists, and alternate between devices, making themselves almost indistinguishable from legitimate users.

Moreover, the scope of these threats is expanding beyond music into podcasts and other similar formats, where artificially inflated audience metrics could mislead advertisers and falsify revenue streams. In response, streaming platforms will be forced to adopt advanced AI-driven detection methods (such as content analysis and behavioral biometrics), moving well beyond traditional bot-detection strategies. There may also be a need to reconsider and redesign royalty models to safeguard the creative economy in the face of these evolving automated threats.

The fight against AI-driven streaming fraud is not just about protecting royalties; it’s about preserving the value of human creativity in music.

This post is part of our Satori Perspectives series, where HUMAN’s threat researchers share timely insights into the tools, tactics, and procedures shaping the threat landscape.

