The rapid evolution of artificial intelligence is creating new classes of sophisticated, automated traffic. While much attention has been paid to AI-driven crawling and content scraping, the most significant long-term shift is the rise of autonomous AI agents, systems that can reason, plan, and execute complex goals on behalf of a user.

Agentic AI creates a new question for security, fraud, and detection teams: as these agents interact with websites and APIs, how can we distinguish between legitimate, productive automation and wasteful or malicious activity?

To answer this question, HUMAN’s SATORI Threat Intelligence and Research teams have invested heavily in building visibility into the AI automation landscape, with a particular focus on AI agents.

In our research, we found that AI agent traffic is detectable today using updated versions of well-known techniques. We’ll share our method, detection signals we’ve observed in the wild, and a three-step model to enforce based on intent and impact, not origin.

Our Research Methodology

To find signals that identify specific AI agents or AI-driven traffic, we generated real sessions through various AI services and examined their behavior in controlled environments.

These findings were translated into structured detection signals and analyzed across two primary data sources:

By correlating signals across these environments, we identified recurring patterns, attribution markers, and detection opportunities specific to AI agents. These findings strengthen our ability to identify and provide visibility into AI-driven traffic.

New Traffic, Familiar Techniques

While these agents represent a new class of automation tools, our research shows they do not rely on new, previously unseen automation techniques. Instead, AI agents excel at leveraging well-known automation strategies such as automation libraries, simulated mouse movements, sandboxed browser environments, and long-standing evasion or spoofing tactics.

Old Methods, Renewed Perspective

In the following sections, we’ll highlight well-established automation techniques that are actively used by both openly declared and stealth AI agents. While these methods are not new, our findings show that AI agents are leveraging them in increasingly efficient and adaptive ways. Revisiting these techniques with a fresh perspective allows classic antibot detection mechanisms to be repurposed for detecting and gaining visibility into AI agent activity.

Detecting and Managing AI-Generated Traffic with Context-Aware Signals

A common question for defenders is whether autonomous AI traffic should be easy to identify using simple indicators like IP address or user agent. In some cases, these signals can provide useful clues. In practice, however, they rarely tell the full story.

Our research shows that modern AI agents often reuse familiar automation techniques while varying how they present themselves across sessions. Some execute from stable infrastructure, while others inherit consumer device characteristics, rotate identifiers, or operate within full browser environments designed to closely resemble real users. As a result, effective detection depends on evaluating how traffic behaves, not just where it appears to come from.

Rather than relying on any single indicator, HUMAN approaches AI agent detection through layered, context-aware analysis. By correlating network properties, browser authenticity, and interaction behavior, defenders can distinguish between human users, benign automation, and autonomous agents – even as AI agent implementations evolve.

Key Signals Used to Differentiate Human and AI-Generated Traffic

| Signal Category | What HUMAN Evaluates | Human Traffic Characteristics | AI Agent Characteristics |
| --- | --- | --- | --- |
| Network Context | IP origin, stability, and routing patterns over time | Diverse consumer networks with natural variability | Cloud, hosted, or inherited environments with detectable patterns or reuse |
| Browser Authenticity | Consistency between claimed browser version and actual execution behavior | Matches known browser release characteristics | Instrumented or emulated browsers with subtle inconsistencies |
| Execution Environment | Presence of automation artifacts, injected scripts, or helper components | Native browser execution without instrumentation | Sandboxed, headless, or tool-assisted environments |
| Interaction Behavior | Timing, variability, and continuity of user inputs | Organic, imprecise, and adaptive behavior | Programmatic precision, repetition, or unnatural consistency |
| Session Evolution | How signals change as the session progresses | Gradual, human-like variation | Static or mechanically adjusted signals across actions |
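The layered idea behind the table can be sketched in a few lines of JavaScript. This is only an illustration, not HUMAN's detection logic: the category keys, the `classifySession` function, the labels, and the threshold of two corroborating categories are all assumptions made for the example. The point it shows is that no single category decides the outcome on its own.

```javascript
// Hypothetical signal categories, mirroring the rows of the table above.
const CATEGORIES = [
  "networkContext",
  "browserAuthenticity",
  "executionEnvironment",
  "interactionBehavior",
  "sessionEvolution",
];

// Classify a session from per-category boolean flags (true = looks automated).
// The labels and the default threshold of two corroborating categories are
// illustrative assumptions, not values taken from the research.
function classifySession(signals, minCorroborating = 2) {
  const flagged = CATEGORIES.filter((name) => signals[name] === true);
  let label = "likely_human";
  if (flagged.length >= minCorroborating) {
    label = "likely_automated";
  } else if (flagged.length === 1) {
    label = "needs_review";
  }
  return { label, flagged };
}

// A hosted IP alone is not enough to label the session automated.
console.log(classifySession({ networkContext: true }));
// Two independent categories agreeing is treated as stronger evidence.
console.log(classifySession({ networkContext: true, interactionBehavior: true }));
```

A real system would likely weight categories differently and re-evaluate as the session progresses; the flat count here is simply the smallest form of "correlate before deciding".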

Detecting Automation Libraries

An automation library (also known as an automation framework) is a tool developers use to programmatically control browsers or applications for automation testing, web scraping, or simulating user interactions. Common examples include Selenium, Puppeteer, and Playwright.

These tools can simulate nearly every aspect of a legitimate session, including mouse movements, clicks, typing behavior, and interactions with page elements. They can also spoof environmental signals such as browser version, operating system, screen resolution, and even geolocation.

Attackers have historically leveraged this ability to mimic real user behavior to bypass antibot systems and carry out advanced automation-based attacks at scale. In response, modern antibot solutions have developed techniques to detect when a session is powered by such frameworks.

One common method involves executing JavaScript-based detection in the client’s browser to identify inconsistencies associated with automation. For example:
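A minimal sketch of such a check is shown here. `navigator.webdriver` is a standard property that browsers set to `true` when they are under WebDriver control, and headless Chrome has historically included the token `HeadlessChrome` in its default user agent. The function name and the shape of the returned object are assumptions for illustration, and both indicators can be spoofed, so they are best treated as one input among many.

```javascript
// Collect simple automation indicators from a window-like object.
// `win` defaults to the global object in a browser; accepting it as a
// parameter makes the function easy to exercise outside a browser.
function detectAutomationArtifacts(win = globalThis) {
  const nav = (win && win.navigator) || {};
  const findings = [];

  // Set to true by browsers that are being driven through WebDriver.
  if (nav.webdriver === true) {
    findings.push("navigator.webdriver");
  }

  // Default user agent token of headless Chrome, unless it was overridden.
  if (typeof nav.userAgent === "string" && nav.userAgent.includes("HeadlessChrome")) {
    findings.push("headless-user-agent");
  }

  return { automated: findings.length > 0, findings };
}

// Example with a stand-in object instead of a real browser window.
const fakeWindow = {
  navigator: { webdriver: true, userAgent: "Mozilla/5.0 HeadlessChrome/120.0.0.0" },
};
console.log(detectAutomationArtifacts(fakeWindow));
```

An absence of findings does not prove a human is present; it only means these two particular artifacts were not exposed.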

Many AI agents rely on traditional automation libraries to carry out their tasks. Detection strategies developed for classic automation therefore remain relevant, provided they are updated for the evolving sophistication of AI-driven behaviors. This lets security teams build and adapt workflows for reviewing, triaging, or responding to agent-driven activity.

HUMAN’s research team analyzed a broad range of AI agents and found that all of them rely on one or more of three major automation frameworks: Selenium, Playwright, and Puppeteer, with Playwright the most common. While some agents use large language models (LLMs) to translate user prompts into automation scripts more efficiently, or combine multiple frameworks to execute complex tasks, they ultimately run those tasks through the same automation engines that have been known and detectable by antibot systems for years.

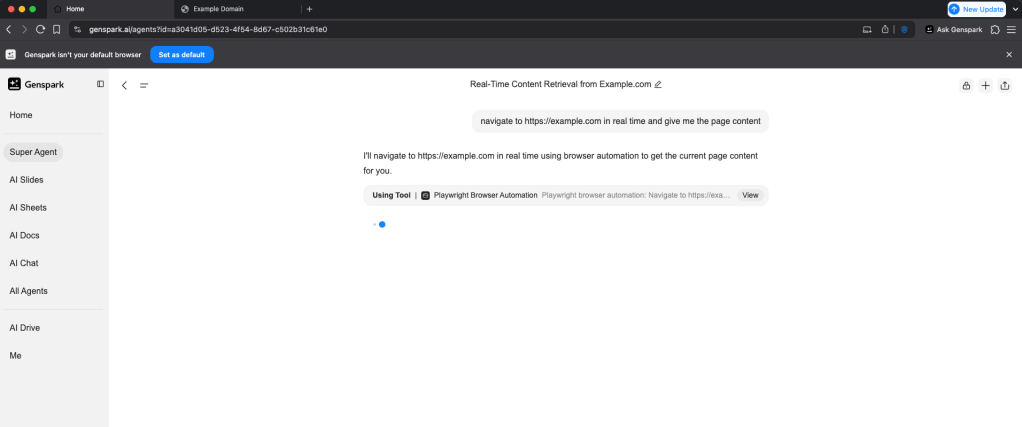

Case Study: Genspark

For example, Genspark is an AI agent engine that generates real-time custom pages, known as Sparkpages, in response to user queries. When a user submits a prompt, Genspark launches a browser session, opens a new tab, follows the path to the requested website, and interacts with the page on the user’s behalf.

Behind the scenes, each of these actions is translated into executable code using the Playwright framework—a process Genspark explicitly discloses during prompt execution.

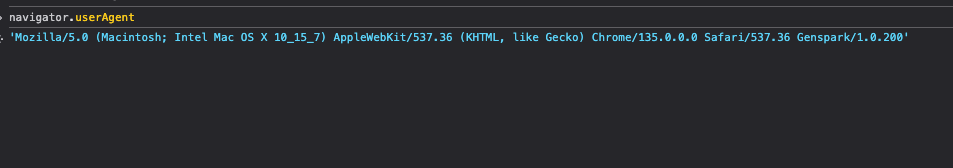

Genspark executes user prompts by launching and interacting with a browser session — a process that consistently includes the Genspark-specific user agent, as can be seen in Figure 1. Genspark explicitly states that it is using Playwright Browser Automation to carry out a scraping request we instructed it to perform (Figure 2).

Figure 1: Genspark UserAgent string.

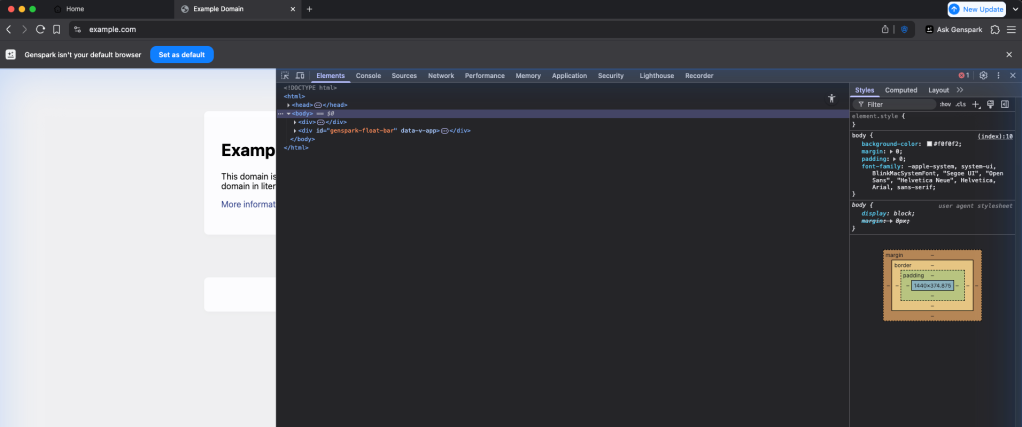

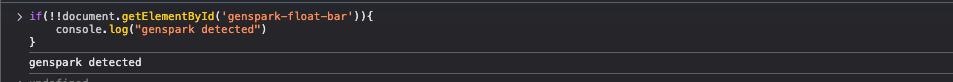

When we inspected these sessions, we observed that every webpage opened by Genspark includes a custom HTML element that distinguishes these requests from other Playwright requests: a <div> with the ID “genspark-float-bar” (Figure 3). This element is injected into the DOM (Document Object Model) as part of the automation process.

It is a reliable indicator that the session was generated by an automation framework, specifically Genspark’s Playwright-powered AI agent, which allows us to detect Genspark-generated requests.
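A check for this marker can be sketched as follows. The element ID comes from the observation above; the function name and the injectable `doc` parameter are assumptions added so the example can run outside a browser.

```javascript
// Return true when the page contains the element Genspark was observed to inject.
// `doc` defaults to the page's document; any object exposing getElementById works.
function hasGensparkFloatBar(doc = globalThis.document) {
  if (!doc || typeof doc.getElementById !== "function") {
    return false;
  }
  return doc.getElementById("genspark-float-bar") !== null;
}

// Example with a stand-in document that contains only the injected element.
const fakeDocument = {
  getElementById: (id) => (id === "genspark-float-bar" ? { id } : null),
};
console.log(hasGensparkFloatBar(fakeDocument));
```

Because the element is injected during automation, it may not exist at the moment the page first loads. In practice the check would probably need to be repeated later in the session, or driven by a `MutationObserver`, rather than run once.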

While most AI agents appear to rely primarily on Playwright as their automation framework, each tends to inject its own unique JavaScript elements or DOM modifications during execution. These elements, combined with Playwright’s built-in functions, result in distinctive behavioral or structural fingerprints for each agent.

Defenders can flag and attribute traffic generated by a particular AI agent based on these unique properties or specific combinations of properties, enabling targeted visibility and enforcement, similar to how traditional antibot systems have long detected and mitigated automated traffic based on framework-specific signals.

Fingerprinting Agent Browser Extensions

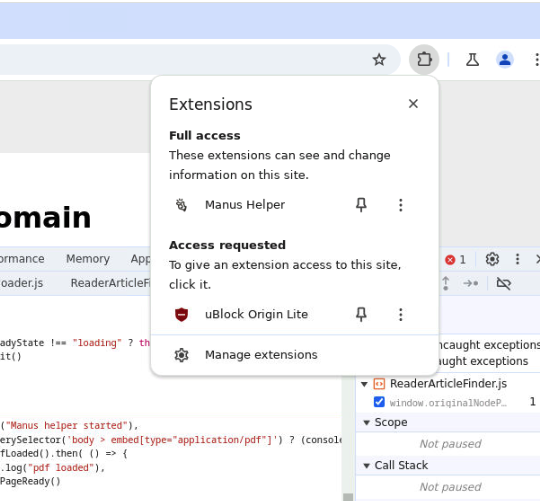

Many AI agents (including Genspark) execute user prompts by launching packaged browser environments, loading the target page, navigating links, and simulating clicks and keystrokes. These browser environments often also include a proprietary helper extension that is unique to the agent. By detecting traffic with signals from that specific extension, you can tag the session as likely AI agent traffic, enabling security teams to build and adapt workflows for reviewing, triaging, or responding to agent-driven activity.

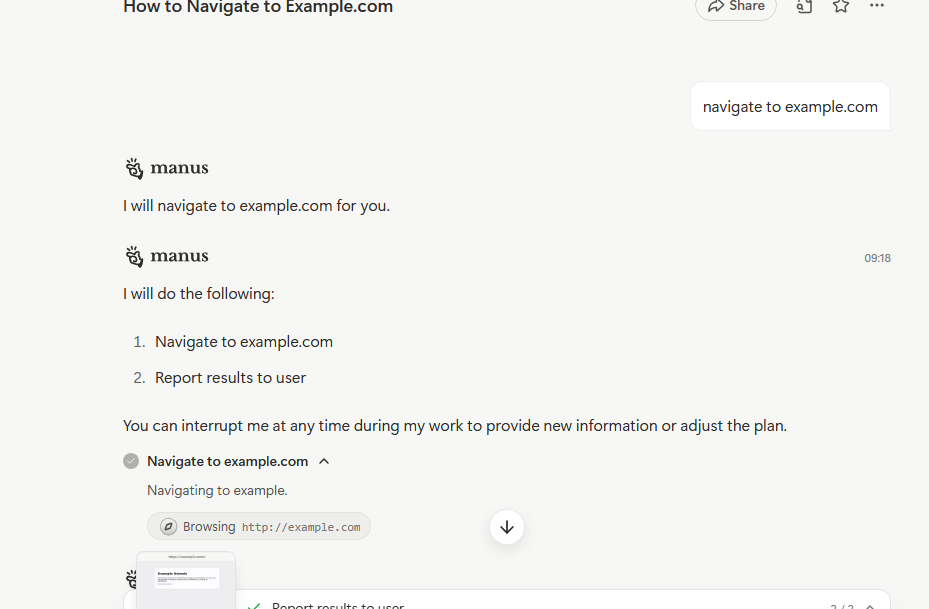

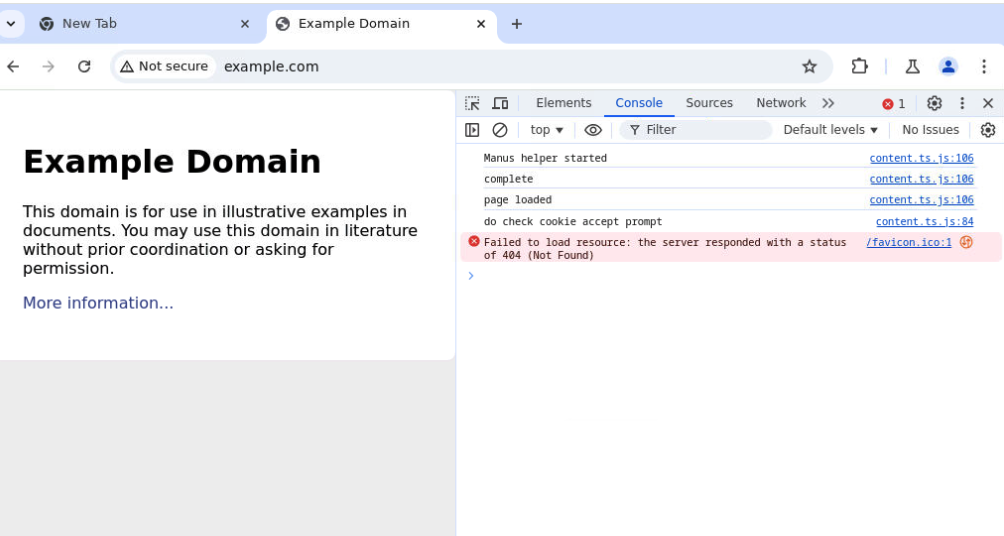

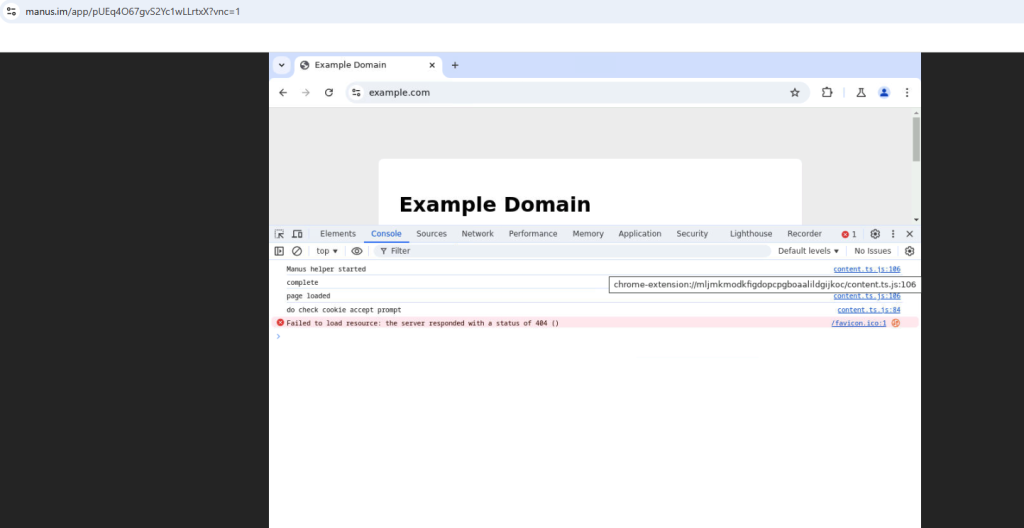

For example, when a user instructs Manus to navigate to a specific website, Manus launches its browser environment (as seen in Figures 5 & 6) and executes the requested prompt.

Inspecting the browser environment upon completion shows that Manus used an additional JS file named content.ts.js, related to a Chrome extension, alongside a log mentioning that the “Manus Helper started” (Figure 7).

This is the Manus Helper extension built into the Manus browsing environment that extracts content from web pages and interacts with them programmatically.

We can pair this extension signal with Manus-specific window properties to flag a session as Manus traffic. To check for the Manus Helper extension, we can issue a fetch request for one of its known resources, using the code below:

    // Probe for the Manus Helper extension by requesting one of its known resources.
    async function detectManusExtension() {
      const extensionID = "mljmkmodkfigdopcpgboaalildgijkoc";
      const knownResource = "content.ts.js";
      const url = `chrome-extension://${extensionID}/${knownResource}`;

      try {
        const response = await fetch(url, { method: "GET" });
        if (response.status === 200) {
          console.log("Manus extension detected via fetch");
          return true;
        }
        console.log("Manus extension not detected");
        return false;
      } catch (err) {
        // fetch rejects when the extension is absent or the resource is not reachable from the page.
        console.log("Manus extension not detected (fetch error):", err.message);
        return false;
      }
    }

We can confidently treat any session that shows the Manus-specific window properties and a positive hit on the Manus Helper extension as Manus traffic.

Synthetic Mouse-Move Signatures

One useful lens for examining bots is their “mouse” movement patterns. Because agents have no physical Human Interface Device (HID) such as a keyboard or mouse, all input is emulated through automation tools. These tools leave fingerprints that reveal whether the entity behind the screen is a human or a bot controlled by an agent.

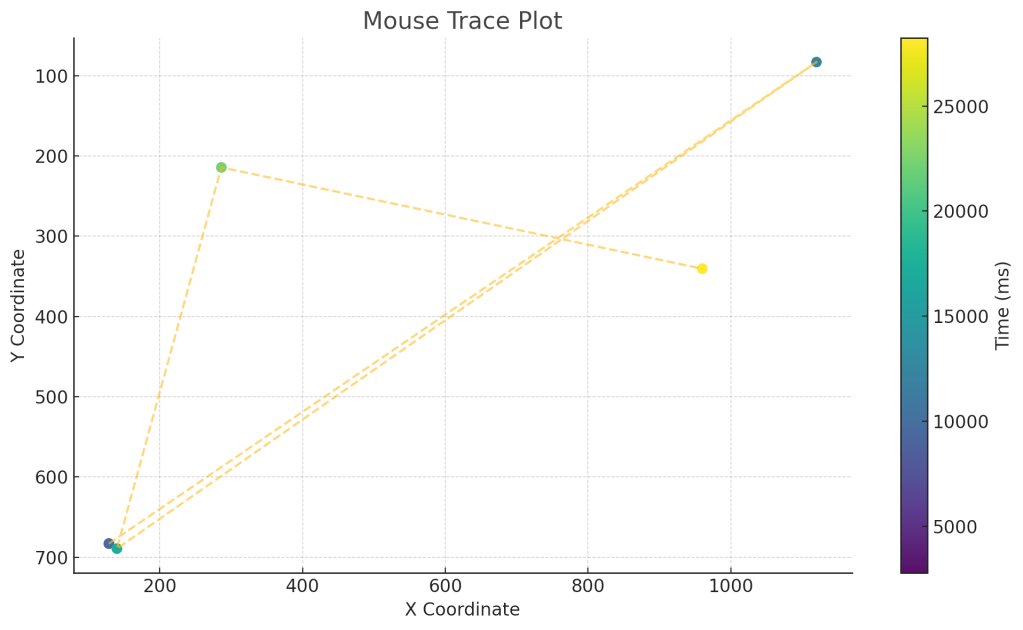

Comparing human and agent mouse movement patterns reveals a distinct advantage for detection. Take ChatGPT Agent, for example. These are its mouse traces when asked to navigate a webpage that requires it to click five randomly positioned boxes:

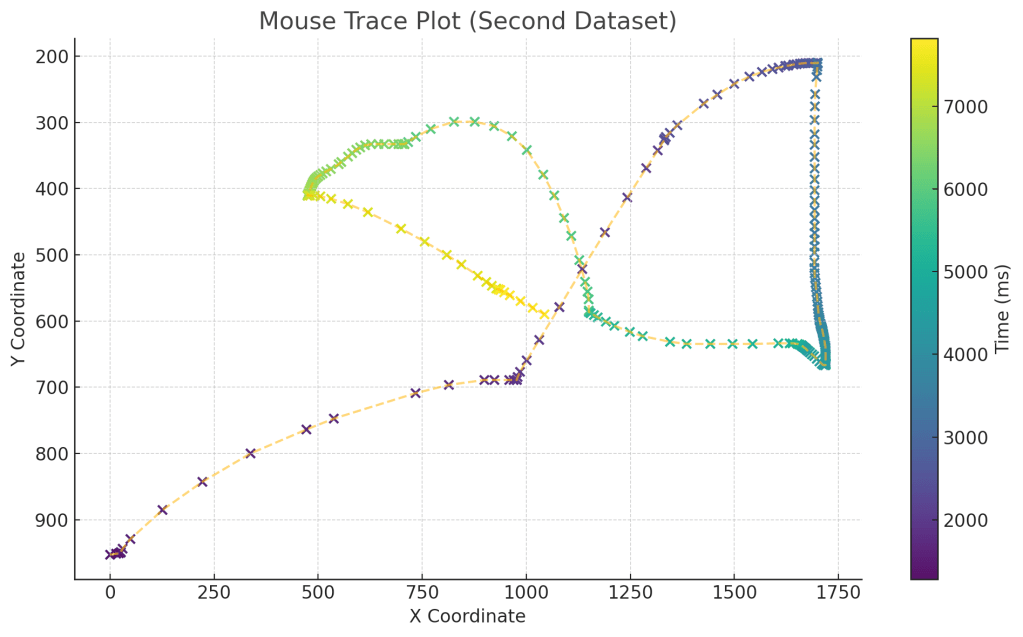

Now compare this to a human that was tasked with the same challenge:

We can clearly see how humans are “messier” and less predictable in their movement. If we dig even deeper, we can see that the agent’s mouse coordinates always land on increments of 0.25 pixels! Just take a look at the X-axis coordinates:

[128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 1120, 1120, 140, 140, 140, 140, 286.25, 286.25, 286.25, 286.25, 960, 960, 960, 960]

Now the Y-axis:

[683, 683, 683, 683, 683, 683, 683, 683, 683, 683, 83, 83, 689.25, 689.25, 689.25, 689.25, 214.25, 214.25, 214.25001525878906, 214.25, 340.5, 340.5, 340.5, 340.5]

Uncanny to say the least! Human movements have a lot more “flourish” to them and don’t just jump to the point they have to click. By leveraging these kinds of subtle behaviors, we are able to determine whether a user is a human or a bot.
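The two observations above, coordinates snapped to a 0.25-pixel grid and a pointer that jumps between a handful of positions, can be measured with a short sketch. The function names, the tolerance, and the choice of summary statistics are assumptions for illustration; the trace is the one listed above. Note that whole-pixel coordinates also sit on a 0.25 grid, so the grid ratio is weak evidence on its own and is more useful next to the other two numbers.

```javascript
// True when `value` lies on a multiple of `step`. The tolerance absorbs
// floating-point noise such as 214.25001525878906 in the trace above.
function isOnGrid(value, step = 0.25, tolerance = 1e-3) {
  const snapped = Math.round(value / step) * step;
  return Math.abs(value - snapped) <= tolerance;
}

// Summarize a pointer trace given parallel arrays of x and y coordinates.
function summarizeTrace(xs, ys, step = 0.25) {
  if (xs.length !== ys.length || xs.length === 0) {
    throw new Error("xs and ys must be non-empty arrays of equal length");
  }
  const distinct = new Set();
  let onGrid = 0;
  let largestJump = 0;
  for (let i = 0; i < xs.length; i++) {
    if (isOnGrid(xs[i], step) && isOnGrid(ys[i], step)) onGrid++;
    // Key positions by grid cell so float noise does not create new points.
    distinct.add(`${Math.round(xs[i] / step)}:${Math.round(ys[i] / step)}`);
    if (i > 0) {
      const jump = Math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]);
      largestJump = Math.max(largestJump, jump);
    }
  }
  return {
    samples: xs.length,
    onGridRatio: onGrid / xs.length,          // share of samples on the grid
    distinctRatio: distinct.size / xs.length, // low when the pointer "teleports"
    largestJump,                              // pixels between consecutive samples
  };
}

const agentX = [128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 128.75, 1120, 1120, 140, 140, 140, 140, 286.25, 286.25, 286.25, 286.25, 960, 960, 960, 960];
const agentY = [683, 683, 683, 683, 683, 683, 683, 683, 683, 683, 83, 83, 689.25, 689.25, 689.25, 689.25, 214.25, 214.25, 214.25001525878906, 214.25, 340.5, 340.5, 340.5, 340.5];
console.log(summarizeTrace(agentX, agentY));
```

For the agent trace, every sample sits on the grid and only five distinct positions appear across 24 samples, with jumps of more than a thousand pixels between consecutive samples. A human trace would be expected to show many distinct positions connected by small steps.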

Detecting and Managing AI-Generated Traffic with Context-Aware Tools

So now that we have visibility into AI-generated traffic, what do we do with it? Well, that depends on the context.

AI traffic isn’t simply “good” or “bad.” A blanket ban on all AI traffic can block off real benefits, such as agentic commerce or LLM answers that surface your brand. On the other hand, simply allowing all AI to access your properties can open them up to abuse, misuse, and waste.

Instead, we at HUMAN advocate for a visibility-first model that leads to intent and context-aware enforcement decisions. This approach is based on 3 steps:

If traffic is safe and beneficial, it should be allowed. If it’s malicious, abusive, or misaligned with business goals, it should be blocked.

This model protects digital environments without stifling innovation. AI tools are here to stay. Rather than treat them as threats by default, we aim to observe, understand, and act based on what they do, enabling trusted automations and blocking harmful ones.

That’s why we built AgenticTrust, a new module in HUMAN Sightline Cyberfraud Defense, designed to give you visibility, control, and adaptive governance over agent-based activity across your websites, applications, and mobile apps.

AgenticTrust gives security and fraud teams continuous, real-time visibility into every AI agent session, classifies intent as the session unfolds, and enforces adaptive policies that change with the agent’s actions rather than issuing a one-time verdict. Key capabilities include persistent monitoring across the full customer journey, reporting and analytics on agent activity, rule-based privilege and permission management, and rate-limited or blocked actions when an agent drifts out of bounds. To learn more, request a demo.