When OWASP launched the Agentic Security Initiative, its first major output was a threat-modeling taxonomy for Agentic AI: a long list of ways autonomous agents can be misused, subverted, or simply go off the rails. That work was intentionally broad. It gave builders and defenders a shared language for thinking about agentic risk, and in a previous post we walked through that Agentic AI Threats & Mitigations list (T01–T17) in detail.

The new OWASP Top 10 for Agentic Applications 2026 plays a narrower, more operational role. Instead of mapping the entire attack surface, it elevates the risks that are already driving real incidents as organizations move agents from pilots into production.

If you’re building agents, this Top 10 is now required reading for secure design and deployment. But the implications do not stop with the teams building agent orchestration frameworks and toolchains. Even if you never ship an agent yourself, your applications are increasingly on the receiving end of agentic behavior: AI assistants, LLM crawlers, and automated browsers that navigate, transact, and make decisions against your public apps and APIs.

Many of the risks in the OWASP Top 10 for Agentic Applications 2026 show up as autonomous traffic whose goals have been hijacked, whose tools are being misused, or whose behavior has drifted into “rogue” territory while still looking superficially legitimate.

In the rest of this post, we look at OWASP’s new list through that lens: what each risk means in practice, and how organizations can apply these ideas even when the agents are not under their control.

First principles: Least-agency and observability are table stakes

Before OWASP dives into individual risks, the Top 10 foregrounds two design principles. They are framed for people building agentic systems, but they matter just as much to organizations that simply have to live with agentic traffic hitting their properties. In that sense, they are the fundamental controls for interacting safely with agentic traffic.

Least agency: constrain what agents are allowed to do

For builders, the principle of “least agency” means not giving agents more autonomy than the business problem justifies. For those on the receiving end of agents, it translates into a simple rule:

Let agents do the narrowest set of actions they need to do in order to provide value to you and your users, and no more.

For example, an agent conducting product research and price comparison does not need permission to log in and edit account details.
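To make the rule concrete, here is a minimal Python sketch of a deny-by-default policy on the receiving side. It assumes you can already classify an inbound client into an agent category; the `Action` values, the category names, and `is_allowed` are hypothetical illustrations, not part of OWASP's guidance or any product API.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical catalogue of actions an external client might attempt."""
    BROWSE_CATALOG = "browse_catalog"
    READ_PRICES = "read_prices"
    ADD_TO_CART = "add_to_cart"
    CHECKOUT = "checkout"
    LOGIN = "login"
    EDIT_ACCOUNT = "edit_account"


# Hypothetical agent categories, each mapped to the narrowest set of actions
# it needs. Anything not listed is denied by default.
AGENT_POLICY = {
    "price_comparison": frozenset({Action.BROWSE_CATALOG, Action.READ_PRICES}),
    "shopping_assistant": frozenset(
        {Action.BROWSE_CATALOG, Action.READ_PRICES, Action.ADD_TO_CART, Action.CHECKOUT}
    ),
}


def is_allowed(agent_category: str, action: Action) -> bool:
    """Return True only if this category is explicitly granted the action."""
    # Unknown categories fall back to an empty set, i.e. no permissions at all.
    return action in AGENT_POLICY.get(agent_category, frozenset())


print(is_allowed("price_comparison", Action.READ_PRICES))   # True
print(is_allowed("price_comparison", Action.EDIT_ACCOUNT))  # False
print(is_allowed("unknown_agent", Action.BROWSE_CATALOG))   # False
```

Denying by default means a new or unrecognized agent type starts with no capabilities, and each permission has to be justified by a concrete use case.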

The goal is not to block all agents. It is to ensure that beneficial use cases (shopping assistants, travel planners, research copilots) can succeed without granting them enough freedom to scrape, arbitrage, or exhaust your systems.

Strong observability: see and govern how agents behave on your properties

“Strong observability” in OWASP’s framing is about seeing what agents are doing, why they are doing it, and which tools and identities they are using. If you do not control the orchestration layer, you still need a version of that visibility at your own boundary.

From the receiving end, that means:

- Distinguish humans, simple scripts, and agents. You need to be able to tell when traffic is coming from an LLM-powered agent or automated browser, not just a generic “bot” bucket.

- Track behavior as sequences, not isolated requests. An individual request may look fine; the pattern over a session tells you whether an agent is acting like a shopper, a scraper, or a fraud tool.

- Attach governance to what you observe. When an agent crosses certain behavioral lines (inventory scraping, high-risk enumeration, abnormal checkout patterns), you should be able to slow it down, challenge it, or block it entirely, without breaking legitimate flows.

- Preserve an audit trail. You need enough telemetry around agent sessions to investigate incidents that involve AI-driven traffic: which endpoints were used, what was accessed, how decisions escalated, and how that differed from normal agent or human behavior.
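The four practices above can be combined in a small per-session object. The following is a minimal sketch, assuming an upstream classifier has already labeled each session as `human`, `agent`, or `script`; the page budgets, the failed-login threshold, the endpoint paths, and the `Session` class itself are hypothetical placeholders you would replace with values drawn from your own traffic.

```python
import time
from dataclasses import dataclass, field

# Hypothetical product-page budgets per session, by client type.
PAGE_BUDGET = {"human": 500, "agent": 200, "script": 50}
MAX_AUTH_FAILURES = 5  # failed logins before a session looks like enumeration


@dataclass
class Session:
    client_type: str  # "human", "agent" or "script", from an upstream classifier
    # Audit trail: (timestamp, endpoint, HTTP status) for every request seen.
    events: list = field(default_factory=list)

    def record(self, endpoint: str, status: int) -> None:
        self.events.append((time.time(), endpoint, status))

    def decide(self) -> str:
        """Judge the whole sequence, not just the latest request."""
        product_views = sum(1 for _, ep, _ in self.events if ep.startswith("/product/"))
        auth_failures = sum(
            1 for _, ep, status in self.events
            if ep == "/login" and status in (401, 403)
        )
        # Unclassified clients get the strictest budget.
        budget = PAGE_BUDGET.get(self.client_type, PAGE_BUDGET["script"])
        if auth_failures >= MAX_AUTH_FAILURES:
            return "block"       # looks like credential or account enumeration
        if product_views >= budget:
            return "challenge"   # looks like inventory scraping
        if product_views >= budget // 2:
            return "throttle"    # trending toward scraping: slow it down
        return "allow"


session = Session("agent")
for i in range(120):
    session.record(f"/product/{i}", 200)
print(session.decide())  # throttle: 120 views is past half of the agent budget
```

Keeping the full event list rather than a running counter is what makes the session auditable after the fact; a production system would persist it outside process memory.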

Least agency without observability is blind risk reduction: you limit features but cannot see where agents are still causing harm. Observability without least agency is just surveillance: you watch agents overreach in real time but have not constrained what they are allowed to do.

The point of combining the two is straightforward: grant external agents the minimum necessary agency to be useful, and monitor them closely enough to enforce that boundary when they start to behave like the ASI risks OWASP calls out.

OWASP’s Top Ten Risks for Agentic Applications

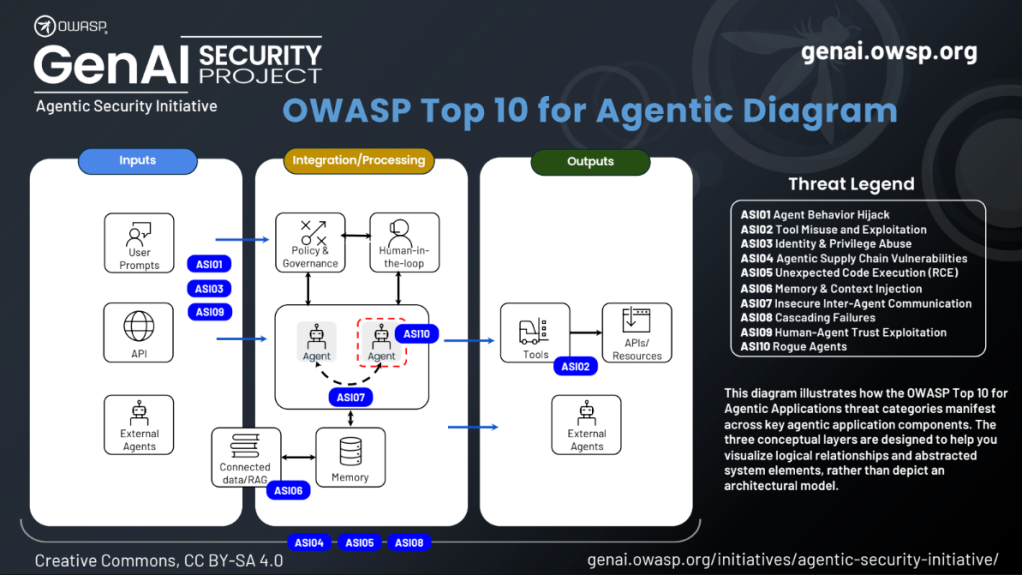

Each item in the new Top 10 corresponds to a distinct failure mode in the agentic stack, from corrupted inputs to misaligned planning to unsafe tool execution. OWASP’s model of the agent pipeline makes the pattern clear: when autonomy, memory, and tool use intersect, failures are no longer isolated mistakes but system-level behaviors with real consequences.

For organizations on the receiving end of agentic traffic, those same failure modes surface as behavioral patterns such as agents scraping, probing, escalating, or drifting off-task, even when the underlying agent architecture is completely outside your control.

| OWASP ASI Risk | What it means in agent pipelines | How it appears to defenders |
| --- | --- | --- |
| ASI01 – Agent Goal Hijack | The agent’s goals are redirected | The agent carries out harmful or malicious actions under the guise of legitimate flows |
| ASI02 – Tool Misuse | Misuse of internal/external tools | Spikes in API usage, unexpected tool chaining, unusual transaction patterns |
| ASI03 – Identity & Privilege Abuse | Escalation through delegated identities | Agents act beyond normal user capabilities; lateral-movement-like behavior |
| ASI04 – Supply Chain Vulnerabilities | Compromised tools/plugins | A clean-looking agent suddenly using malicious toolchains |
| ASI05 – Unexpected Code Execution (RCE) | Unintended code execution | Agents submit unusual payloads or trigger unexpected external calls |
| ASI06 – Memory Poisoning | Persistent misalignment | Repeated wrong or adversarial behaviors across sessions |
| ASI07 – Insecure Inter-Agent Comms | Message spoofing/tampering | Traffic patterns inconsistent with a single agent identity |
| ASI08 – Cascading Failures | Multi-agent amplification | Bursts of rapidly repeating requests and retries |
| ASI09 – Human–Agent Trust Exploitation | Social engineering via agents | Legitimate-looking flows that pressure users into harmful approvals |
| ASI10 – Rogue Agents | Fully misaligned autonomous behavior | Agents behaving like insiders; broad access attempts, unpredictable sequences |

Below is a concise view of each ASI risk and why it matters beyond pure engineering teams.

1. ASI01 – Agent Goal Hijack

Agents can have their goals and decision logic silently redirected by prompt injection, poisoned content, malicious emails, or crafted documents. The agent still “looks” on task, but it is now serving the attacker’s intent instead of the user’s.

Why it matters to you: A third-party shopping or finance agent interacting with your site could be induced to exfiltrate pricing, inventory, or customer data via your own interfaces. You never see a vulnerability exploit in the traditional sense; you just see a “legitimate” agent making a series of malicious choices.

2. ASI02 – Tool Misuse and Exploitation

Agents often have access to powerful tools (email, CRM, billing, shell, cloud APIs). Even when access is technically “authorized,” the agent can be steered into using these tools in unsafe ways: chaining internal and external tools, issuing destructive commands, or abusing high-cost services.

Why it matters to you: If an external agent is allowed to use your public APIs or low-risk tools without any guardrails, it can still cause outages, spend spikes, or large-scale scraping — all via interfaces you intentionally exposed.
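One way to put a guardrail on intentionally exposed interfaces is to budget by cost rather than by raw request count. The sketch below is a minimal sliding-window limiter assuming hypothetical per-operation cost weights (`OPERATION_COST`) and a hypothetical `CostBudget` class; the numbers are placeholders, and a real deployment would keep this state in shared storage rather than in one process.

```python
import time
from collections import defaultdict, deque
from typing import Optional

# Hypothetical relative cost of each operation exposed to external clients.
OPERATION_COST = {"search": 1.0, "price_quote": 5.0, "send_notification": 20.0}


class CostBudget:
    """Sliding-window spend limit per client (a minimal sketch).

    Weighting calls by cost means a client chaining expensive operations
    runs out of budget faster than one browsing cheap endpoints.
    """

    def __init__(self, max_cost: float, window_seconds: float) -> None:
        self.max_cost = max_cost
        self.window = window_seconds
        # client_id -> deque of (timestamp, cost) for calls inside the window
        self._spend = defaultdict(deque)

    def allow(self, client_id: str, operation: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        cost = OPERATION_COST[operation]
        history = self._spend[client_id]
        # Forget spend that has aged out of the window.
        while history and now - history[0][0] >= self.window:
            history.popleft()
        if sum(c for _, c in history) + cost > self.max_cost:
            return False  # over budget: reject (or queue, or challenge)
        history.append((now, cost))
        return True


budget = CostBudget(max_cost=50, window_seconds=60)
print([budget.allow("agent-42", "send_notification", now=0.0) for _ in range(3)])
# [True, True, False]
print(budget.allow("agent-42", "send_notification", now=61.0))  # True: window has rolled over
```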

3. ASI03 – Identity and Privilege Abuse

Agent identity is messy. Agents inherit user roles, cache credentials, and call each other. Attackers exploit this delegation chain to escalate privileges, reuse cached secrets, or trick a high-privilege agent into acting on a low-privilege request.

Why it matters to you: When an agent is allowed to act “as the user,” you effectively extend that user’s blast radius to any system the agent can talk to. Without separate identities and session boundaries for agents, you cannot enforce true least privilege or reconstruct what happened after an incident.
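A minimal sketch of what "separate identities and session boundaries" can look like follows. It assumes a hypothetical `DelegatedGrant` model in which the agent carries its own ID, receives only the intersection of the user's scopes and what its agent type may hold, and expires quickly; the scope strings and helper names are illustrative, not a specific standard's or vendor's API.

```python
import time
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class DelegatedGrant:
    """Credential for an agent acting on behalf of a user (hypothetical model)."""
    user_id: str
    agent_id: str          # the agent's own identity, distinct from the user's
    scopes: frozenset
    expires_at: float


AUDIT_LOG: List[str] = []


def delegate(user_id: str, user_scopes: Iterable[str], agent_id: str,
             agent_max_scopes: Iterable[str], ttl_seconds: float,
             now: Optional[float] = None) -> DelegatedGrant:
    now = time.time() if now is None else now
    # Intersection: delegation can narrow the user's access but never widen it.
    scopes = frozenset(user_scopes) & frozenset(agent_max_scopes)
    return DelegatedGrant(user_id, agent_id, scopes, now + ttl_seconds)


def authorize(grant: DelegatedGrant, scope: str, now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    ok = now < grant.expires_at and scope in grant.scopes
    # Record both identities so incidents can be traced to the agent, not just the user.
    AUDIT_LOG.append(f"{grant.agent_id} for {grant.user_id}: {scope} -> {'allow' if ok else 'deny'}")
    return ok


grant = delegate("alice", {"orders:read", "orders:write", "account:edit"},
                 "travel-agent-7", {"orders:read", "orders:write"},
                 ttl_seconds=900, now=0.0)
print(authorize(grant, "orders:read", now=10.0))    # True
print(authorize(grant, "account:edit", now=10.0))   # False: not granted to this agent
print(authorize(grant, "orders:read", now=1000.0))  # False: grant expired
```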

4. ASI04 – Agentic Supply Chain Vulnerabilities

Agents are built from third-party components: tools, plugins, prompt templates, MCP servers, agent registries, RAG connectors. If any of these are malicious or compromised, they can inject instructions, exfiltrate data, or impersonate trusted tools at runtime.

Why it matters to you: You may vet the core agent, but not the evolving constellation of tools and plugins it depends on. From your perimeter’s perspective, you see only a single agent and its User-Agent string, while the real risk is buried in its internal supply chain.
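For teams that do operate agents, one common mitigation is to pin every tool or plugin to the exact artifact that was reviewed. The sketch below uses only Python's standard `hashlib` and assumes a hypothetical allowlist (`PINNED_TOOLS`) mapping tool names to SHA-256 digests. It illustrates the idea only: it detects a swapped or altered artifact, but says nothing about what a reviewed tool does at runtime.

```python
import hashlib

# Hypothetical allowlist: tool name -> SHA-256 digest of the exact artifact you reviewed.
# In practice you would store the hex digest recorded at review time; it is computed
# inline here only to keep the example self-contained.
REVIEWED_ARTIFACT = b"pdf-summarizer plugin, version 1.2.0"
PINNED_TOOLS = {"pdf-summarizer": hashlib.sha256(REVIEWED_ARTIFACT).hexdigest()}


def verify_tool(name: str, artifact: bytes) -> bool:
    """Load a tool only if its bytes match the pinned digest."""
    expected = PINNED_TOOLS.get(name)
    if expected is None:
        return False  # unknown tools are rejected rather than trusted by default
    return hashlib.sha256(artifact).hexdigest() == expected


print(verify_tool("pdf-summarizer", REVIEWED_ARTIFACT))                  # True
print(verify_tool("pdf-summarizer", REVIEWED_ARTIFACT + b" + payload"))  # False: artifact changed
print(verify_tool("unvetted-tool", b"anything"))                         # False: not on the allowlist
```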

5. ASI05 – Unexpected Code Execution (RCE)

Many agents can write and run code. Prompt injection, unsafe “vibe coding” loops, or poisoned packages can turn innocent-looking requests into remote code execution inside the agent’s environment, and from there into your infrastructure if isolation is weak.

Why it matters to you: This is the bridge from “weird AI behavior” to classic high-severity compromise. When an agent can run shell commands, install packages, or invoke interpreters without strong sandboxing, every prompt is one step away from an RCE attempt.

6. ASI06 – Memory and Context Poisoning

Agents store and reuse context: summaries, embeddings, system notes, RAG indexes. Attackers seed those stores with malicious or misleading entries so that future decisions are made on poisoned “facts.” The agent’s behavior drifts gradually rather than in one obvious step.

Why it matters to you: Unlike a single bad response, poisoned memory persists across sessions and users. That matters when organizations start to trust “what the agent remembers” for pricing, policy, or security decisions.
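One partial defense is to record where each memory entry came from and refuse to let untrusted entries inform high-stakes decisions. The sketch below assumes hypothetical source labels (`TRUSTED_SOURCES`) and a hypothetical `context_for` helper; provenance filtering does not catch poisoned data that arrives through a trusted source, so treat it as one layer among several.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical systems of record allowed to inform high-stakes decisions.
TRUSTED_SOURCES = {"pricing_service", "policy_admin"}


@dataclass(frozen=True)
class MemoryEntry:
    text: str
    source: str  # where the entry came from: a system of record, a user, a web page, etc.


def context_for(entries: Iterable[MemoryEntry], high_stakes: bool) -> List[MemoryEntry]:
    """Select which remembered entries may feed a decision."""
    if not high_stakes:
        return list(entries)
    # For pricing, policy, or security decisions, ignore anything without trusted provenance.
    return [e for e in entries if e.source in TRUSTED_SOURCES]


memory = [
    MemoryEntry("SKU-1 list price is $40", "pricing_service"),
    MemoryEntry("SKU-1 is free for loyal customers", "scraped_web_page"),  # seeded by an attacker
]
print([e.text for e in context_for(memory, high_stakes=True)])
# ['SKU-1 list price is $40']
```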

7. ASI07 – Insecure Inter-Agent Communication

In multi-agent systems, agents coordinate via message buses, APIs, A2A protocols, or shared memory. If those channels are not authenticated, encrypted, and semantically validated, attackers can spoof or tamper with messages, replay instructions, or insert rogue agents into the mesh.

Why it matters to you: Multi-agent workflows blur lines of responsibility. If a rogue or compromised agent can inject itself into internal coordination, you may see legitimate credentials and tools being used in patterns that are clearly not business-aligned.
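Authentication and replay protection for inter-agent messages can be sketched with the standard library's `hmac`, `hashlib`, and `secrets` modules. This is a minimal illustration assuming each agent shares a secret key with the receiver; the envelope fields, `sign_message`, `Verifier`, and the 30-second skew window are hypothetical choices, and encryption in transit (for example TLS) is out of scope here.

```python
import hashlib
import hmac
import json
import secrets
import time
from typing import Dict, Optional

MAX_SKEW_SECONDS = 30.0  # hypothetical tolerance for clock drift between agents


def _canonical(envelope: dict) -> bytes:
    # Deterministic serialization so sender and receiver MAC identical bytes.
    return json.dumps(envelope, sort_keys=True, separators=(",", ":")).encode()


def sign_message(key: bytes, sender: str, body: dict, now: Optional[float] = None) -> dict:
    envelope = {
        "sender": sender,
        "body": body,
        "ts": time.time() if now is None else now,
        "nonce": secrets.token_hex(16),  # unique per message, for replay detection
    }
    envelope["mac"] = hmac.new(key, _canonical(envelope), hashlib.sha256).hexdigest()
    return envelope


class Verifier:
    def __init__(self, keys: Dict[str, bytes]) -> None:
        self.keys = keys          # sender id -> shared secret key
        self.seen_nonces = set()  # production code would expire entries older than the skew window

    def verify(self, envelope: dict, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        key = self.keys.get(envelope.get("sender"))
        if key is None:
            return False  # unknown sender: not a registered member of the mesh
        unsigned = {k: v for k, v in envelope.items() if k != "mac"}
        expected = hmac.new(key, _canonical(unsigned), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, str(envelope.get("mac", ""))):
            return False  # spoofed or tampered
        if abs(now - envelope["ts"]) > MAX_SKEW_SECONDS:
            return False  # too old (or from the future): treat as a possible replay
        if envelope["nonce"] in self.seen_nonces:
            return False  # exact replay of a message already accepted
        self.seen_nonces.add(envelope["nonce"])
        return True


key = b"demo-shared-secret"
verifier = Verifier({"planner": key})
msg = sign_message(key, "planner", {"task": "quote", "sku": "A1"}, now=100.0)
print(verifier.verify(msg, now=105.0))  # True
print(verifier.verify(msg, now=106.0))  # False: replayed nonce
```

A valid MAC only proves who sent a message and that it was not altered. The semantic validation OWASP also calls for (does this instruction make sense for this sender and this task?) still needs its own checks.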

8. ASI08 – Cascading Failures

A single poisoned tool, memory entry, or misconfigured policy can ripple through a network of agents, amplifying into widespread outages or data loss. Autonomy lets a local mistake propagate much faster than human oversight can catch it.

Why it matters to you: The operational risk is not just “one bad decision.” It is the feedback loop: agents consuming each other’s outputs, reusing corrupted state, and systematically steering systems into unsafe configurations or financial exposure.
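A classic way to break that feedback loop is a circuit breaker placed in front of each downstream agent or tool. The sketch below is a minimal, single-process version with hypothetical thresholds; `CircuitBreaker` and `CircuitOpenError` are illustrative names, not a particular library's API.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a downstream component that keeps failing."""


class CircuitBreaker:
    """Minimal circuit breaker around one downstream agent or tool.

    Closed: calls pass through. After `max_failures` consecutive failures it
    opens and rejects calls for `reset_after` seconds. After that, one trial
    call is let through (half-open): success closes it, failure reopens it.
    """

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any], now: Optional[float] = None) -> Any:
        now = time.monotonic() if now is None else now
        if self.opened_at is not None and now - self.opened_at < self.reset_after:
            raise CircuitOpenError("downstream marked unhealthy; not propagating the call")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Reopen if this was the half-open trial, or open once the limit is hit.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = now
            raise
        self.failures = 0
        self.opened_at = None
        return result


def poisoned_tool():
    raise ValueError("malformed output from upstream agent")


breaker = CircuitBreaker(max_failures=3, reset_after=30.0)
for t in range(3):
    try:
        breaker.call(poisoned_tool, now=float(t))
    except ValueError:
        pass
try:
    breaker.call(poisoned_tool, now=5.0)
except CircuitOpenError as exc:
    print("blocked:", exc)
```

The breaker does not fix the poisoned component; it limits how far its output can spread while humans investigate.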

9. ASI09 – Human–Agent Trust Exploitation

Agents sound authoritative. They write clean explanations, show polished previews, and mirror human tone. Attackers (or misaligned designs) exploit that trust to socially engineer humans into approving harmful actions, sharing credentials, or bypassing normal checks, with the agent effectively laundering the malicious intent.

Why it matters to you: Many of the most damaging incidents in the Top 10 involve a human “clicking OK” on something that looks reasonable in the moment. The audit trail shows a human approval, yet the real origin was a manipulated agent.

10. ASI10 – Rogue Agents

Sometimes the problem is not a single prompt or tool, but the agent itself. A rogue agent is one whose behavior has drifted from its design intent: it pursues hidden goals, self-replicates, hijacks workflows, or games its reward signals in ways that harm the organization.

Why it matters to you: Rogue agents are the agentic equivalent of insider threats. Their actions often look legitimate in isolation. The only way to catch them is to monitor behavior over time: what they access, where they send data, which tools they invoke, and how that differs from a stable baseline.
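Comparing current behavior to a stable baseline can start very simply. The sketch below uses total variation distance between endpoint-usage distributions as one possible drift measure; the endpoint names and the `DRIFT_THRESHOLD` value are hypothetical, and real baselines would typically also cover data volumes, destinations, and tool invocations.

```python
from collections import Counter
from typing import Dict, Iterable

DRIFT_THRESHOLD = 0.4  # hypothetical; tune against your own traffic


def endpoint_distribution(requests: Iterable[str]) -> Dict[str, float]:
    """Share of requests that went to each endpoint."""
    counts = Counter(requests)
    total = sum(counts.values())
    return {endpoint: n / total for endpoint, n in counts.items()}


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Half the L1 distance: 0.0 means identical behavior, 1.0 means disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


baseline = endpoint_distribution(["/search"] * 6 + ["/product"] * 3 + ["/cart"])
today = endpoint_distribution(["/search"] + ["/account/export"] * 6 + ["/admin/users"] * 3)
drift = total_variation(baseline, today)
print(f"drift={drift:.2f}, flag={drift > DRIFT_THRESHOLD}")  # drift=0.90, flag=True
```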

What if the agents aren’t yours?

If your business operates consumer apps, ecommerce sites, banking portals, adtech, or other internet-facing properties, you already see non-human traffic from AI agents, LLM crawlers, and automated browsers. Some are benign (assistants doing price comparison, copilots booking travel); some are adversarial (scrapers, credential-stuffing tools, automated checkout bots). Increasingly, those non-human visitors will be agentic.

In that world, OWASP’s Top 10 raises a different set of questions for defenders:

- Can you tell whether an inbound “user” is a human, a simple script, or a full agent orchestrating tools, memory, and identities behind the scenes?

- Can you detect when a previously benign agent has become compromised, goal-hijacked, or rogue based on how it moves through your application?

- Can you differentiate legitimate agentic commerce (for example, a trusted shopping assistant) from attempts to exploit pricing, scrape inventory, or launder fraudulent transactions?

- Do you have a way to block or sandbox agents that exhibit ASI-style behaviors (tool misuse, privilege probing, cascading retries), even if the agent was never built with your security posture in mind?

The Top 10 is aimed at builders, but the consequences land on everyone. If the ecosystem is full of over-privileged, supply-chain-compromised, or memory-poisoned agents, your properties will be on the receiving end of their mistakes and compromises whether you adopt agents internally or not.

That’s why visibility and control over all non-human traffic, including Agentic AI, is foundational.

How HUMAN and AgenticTrust fit into the OWASP picture

HUMAN has spent more than a decade distinguishing good automation from bad: separating helpful bots from fraud bots, search crawlers from credential-stuffing tools, and now legitimate AI agents from adversarial or compromised ones.

Agentic AI raises the bar. Traditional bot defenses focused on static scripts and narrow automation. OWASP’s new Top 10 assumes something more dangerous: autonomous systems that can plan, remember, choose tools, and adapt in real time.

This list underscores how rapidly autonomy is reshaping the threat landscape. From Agent Goal Hijack (ASI01) to Identity and Privilege Abuse (ASI03), and from Human–Agent Trust Exploitation (ASI09) to fully Rogue Agents (ASI10), the risks are no longer theoretical; they’re active, systemic, and already hitting production systems.

At HUMAN Security, we see this shift every day across AI agents, LLM crawlers, and automated browsers. AgenticTrust was purpose-built for this era: to verify every agentic interaction, detect intent in real time, and ensure businesses can safely adopt beneficial agent traffic while blocking the adversarial, deceptive, or compromised behaviors highlighted throughout the OWASP guidance.