When a new website goes live, it’s not people who visit first—it’s bots. Automated tools probe new domains within minutes, long before any customer or legitimate user arrives.

These bots vary widely in intent: some are benign (search engine crawlers indexing your pages, or commercial security scanners checking for vulnerabilities with permission), but many are malicious. Among the most pernicious are web scanner bots, which quickly examine websites for weaknesses and exploit them, turning reconnaissance and attack into a single automated sequence, carried out at scale.

HUMAN’s Satori Threat Intelligence team monitors bot activity across our customer network and in dedicated research environments. One such environment is a honeypot: a web server we intentionally set up to attract only bot traffic. By observing the requests hitting this fresh, otherwise unpublicized website, we gain insight into the types of bots that target a website from its inception onward. One early finding in this experiment was that web scanners consistently dominate early traffic to new sites and continue to probe them day after day, long after other bot types begin to appear. This persistence underscores why scanner activity is a security concern for the full life span of any web property.

This blog post examines the threat of these web scanner bots and shares our recent research findings on several active scanner campaigns (including the Mozi.a botnet, a Mirai-variant called Jaws, and the “Romanian Distillery” scanner). We’ll explain what web scanners are, how they operate as part of larger botnets, and why they’re a serious risk. We’ll also discuss the implications for organizations and how advanced defenses can mitigate these threats.

Executive Summary

What are Web Scanners? Threat Actors’ ‘Bot Scouts’

Web scanners, also known as website vulnerability scanners, are automated tools designed to identify security weaknesses in web applications, websites, and APIs. They systematically probe and inspect websites for misconfigurations, exposed files, default credentials, or known vulnerable software. If a new website is still being configured or lacks proper protections, scanners will attempt to find and exploit any flaw they can before the site’s owners have a chance to secure it.

Not all scanners are bad, though. There are “good” scanners run by security companies or researchers to help site owners by identifying vulnerabilities so they can be fixed, before those “bad” scanners get to them. But both good and bad scanners impose load on your site, and the bad ones, if not blocked, will certainly attempt to leverage any weakness they find. Scanners don’t just visit once; they constantly and persistently re-scan sites over time (since new vulnerabilities might appear with site updates or as new exploits are discovered).

Quantifying Web Scanner Traffic to New Websites

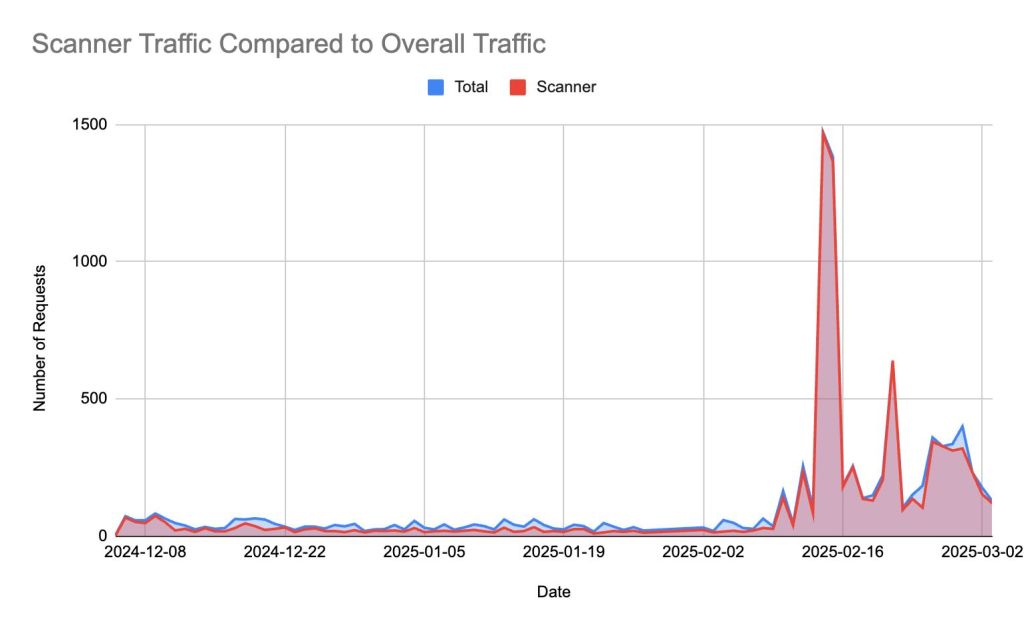

Let’s look at traffic to our honeypot site in the months following its initial launch.

Figure 1 illustrates the dominance of scanner traffic within the overall bot traffic visiting the website, particularly in the period immediately following its launch. The chart highlights that, on average, 69.5% of bot traffic originates from scanners. Notably, on certain days, scanner traffic accounts for over 90% of bot activity, occasionally reaching 100%, meaning that all detected bot traffic on those days was exclusively from scanners. Figure 2 underscores the overwhelming presence of scanner traffic compared to other bot categories (such as crawlers and scrapers), reinforcing its critical role in early-stage website activity.
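A figure like “69.5% on average” depends on how the average is taken. The sketch below, using made-up per-day counts rather than our honeypot data, shows two plausible methods: averaging each day’s scanner share, or pooling all requests. Which method underlies the figure above is not stated here, so treat the choice as an assumption.

```python
from statistics import mean

# Hypothetical per-day request counts by bot category (illustrative numbers,
# not the honeypot's data).
daily_counts = {
    "day-1": {"scanner": 90, "crawler": 10},
    "day-2": {"scanner": 40, "crawler": 35, "scraper": 25},
    "day-3": {"scanner": 12},
}

def scanner_share(counts: dict) -> float:
    """Fraction of one day's bot requests that came from scanners."""
    total = sum(counts.values())
    return counts.get("scanner", 0) / total if total else 0.0

shares = {day: scanner_share(c) for day, c in daily_counts.items()}
average_share = mean(shares.values())  # mean of the daily shares

# Pooled alternative: all scanner requests divided by all bot requests.
pooled_share = (sum(c.get("scanner", 0) for c in daily_counts.values())
                / sum(sum(c.values()) for c in daily_counts.values()))

for day, share in shares.items():
    print(f"{day}: {share:.0%}")
print(f"average of daily shares: {average_share:.1%}")  # 76.7%
print(f"pooled share: {pooled_share:.1%}")  # 67.0%
```

The two numbers diverge because a quiet day where every request came from a scanner (day-3) counts as much as a busy day in the daily average.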

How Web Scanners Find New Targets

To get first in line for any new website, threat actors take advantage of feeds and services that announce new websites or domains coming online. For example, they monitor Newly Registered Domain (NRD) feeds: lists of recently registered, updated, or dropped domains compiled from sources such as WHOIS databases and domain drop lists. NRD feeds were built as policy feeds to strengthen corporate security, but attackers repurpose them into reconnaissance feeds aimed at the websites themselves.

Threat actors also monitor certificate transparency (CT) logs, often through real-time streams such as CertStream, which publish newly issued SSL/TLS certificates. For scanners, a new domain registration or certificate issuance signals a new website to scan. Once large-scale crawlers index the new website, scanners may also monitor the search engines’ new listings, and scanning volume increases even further.
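To show why a certificate alone is enough to put a site on a scanner’s list, here is a minimal sketch that pulls hostnames out of one CT update. The message layout (`message_type`, `data.leaf_cert.all_domains`) is modeled on the JSON CertStream streams, but it is an assumption here and should be checked against CertStream’s current documentation; the domains are placeholders.

```python
import json

# One certificate-transparency update, modeled on CertStream's JSON shape.
# Field names are assumed; domain names are placeholders.
raw_message = json.dumps({
    "message_type": "certificate_update",
    "data": {"leaf_cert": {"all_domains": ["*.shop.example", "shop.example", "www.shop.example"]}},
})

def domains_from_ct_update(raw: str) -> set:
    """Return the hostnames named in one CT update, with wildcard prefixes removed."""
    msg = json.loads(raw)
    if msg.get("message_type") != "certificate_update":
        return set()  # heartbeats and other message types carry no certificate
    names = msg["data"]["leaf_cert"].get("all_domains", [])
    return {name.removeprefix("*.") for name in names}

print(sorted(domains_from_ct_update(raw_message)))  # ['shop.example', 'www.shop.example']
```

Every hostname on a new certificate becomes public this way, which is why a site can be probed before it is announced anywhere else. Defenders can watch the same kind of feed for certificates issued for their own domains.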

Identifying Web Scanner Bot Traffic

Some scanners openly identify themselves in the user-agent string (the part of an HTTP request that might say, for example, “Mozilla/5.0 (compatible; ScannerXYZ/1.0; …)”). Security teams can easily filter or block those known scanners.
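Filtering self-identified scanners amounts to a substring match on the user-agent header. The sketch below assumes a hypothetical token list: “ScannerXYZ” is the placeholder from the example above, and “ExampleScanBot” is invented for illustration.

```python
import re

# Hypothetical tokens for scanners that announce themselves (illustrative only).
DECLARED_SCANNER_TOKENS = ["ScannerXYZ", "ExampleScanBot"]

_DECLARED = re.compile("|".join(re.escape(t) for t in DECLARED_SCANNER_TOKENS), re.IGNORECASE)

def is_declared_scanner(user_agent: str) -> bool:
    """True if the user-agent string contains a self-identifying scanner token."""
    return bool(_DECLARED.search(user_agent or ""))

print(is_declared_scanner("Mozilla/5.0 (compatible; ScannerXYZ/1.0)"))  # True
print(is_declared_scanner("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))  # False
```

This only catches scanners that choose to be honest; changing one header defeats it.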

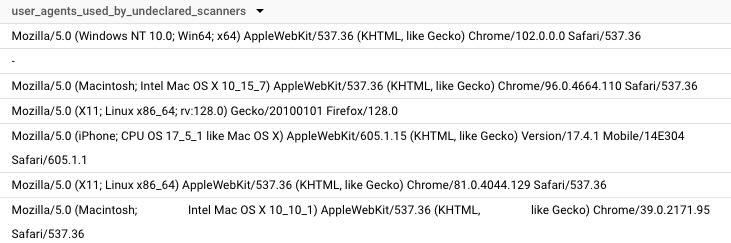

But many malicious scanners deliberately mask their identity or use misleading user agents to obfuscate their true nature. In these cases, identifying them requires analyzing their behavior and deploying antibot mechanisms to intercept their activity. The figure below highlights 7 out of 13 different types of scanners we uncovered, which collectively made hundreds of requests over time while disguising themselves behind commonly used or even absent user agents.

Other user agents we observed point to outdated or anachronistic systems, including BeOS, legacy Linux kernels, and even Internet Explorer versions for Windows 3.1. No real users are surfing the web on Windows 3.1 today, so this was a dead giveaway of automated activity. These impossibly old user agents likely came from a public user-agent database that the attackers grabbed for obfuscation. It’s a fun, benign find, but traffic like this should never reach your web servers if you have a decent bot mitigation solution deployed. Figure 4 shows some examples, with user agents going back decades.
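The two giveaways described above, a missing user agent and an impossibly old platform, can be expressed as simple red-flag checks. This is a minimal sketch; the legacy-platform tokens are a small illustrative sample, and the sample user-agent strings are constructed for the example.

```python
from typing import List, Optional

# Illustrative sample of legacy-platform tokens; not a complete catalogue.
LEGACY_PLATFORM_TOKENS = ("Windows 3.1", "BeOS", "Win16")

def user_agent_red_flags(user_agent: Optional[str]) -> List[str]:
    """Return the reasons a user-agent string looks automated (empty if none)."""
    ua = (user_agent or "").strip()
    if ua in ("", "-"):
        # No header at all, or "-": what access logs record for a missing
        # user agent, and also a value some scanners send literally.
        return ["missing user agent"]
    return [f"legacy platform: {token}" for token in LEGACY_PLATFORM_TOKENS if token in ua]

print(user_agent_red_flags("Mozilla/2.0 (compatible; MSIE 3.0; Windows 3.1)"))
print(user_agent_red_flags("-"))
print(user_agent_red_flags("Mozilla/5.0 (X11; Linux x86_64)"))
```

On their own these are weak signals, since a scanner can just as easily copy a modern browser string; they are most useful combined with behavioral checks.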

Reconnaissance and Probing the Target

Before scanners are even deployed, operators conduct reconnaissance to identify likely entry points—directories, configuration files, endpoints, and services that might exist across newly launched sites. They craft scanners with predefined paths and exploitation logic, tuned to probe and attack if those elements are present.

Once launched against a site, the scanner rapidly tests for these known targets. It attempts to enumerate directories, pages, API endpoints, and exposed resources, executing preset payloads or exploits where applicable.

One of the most common techniques scanners use is ‘dirbusting’, a dictionary-based attack against web servers that automates the discovery of hidden files and directories on a website. The scanner works through predefined lists of potential directory and file names (e.g., /admin/, /config.php, /backup.zip) in the hope of getting lucky and finding unprotected sensitive files or admin interfaces.
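The dictionary part of dirbusting is just a cross product of directory and file names. The sketch below only builds the list of candidate paths (it sends no requests), using tiny illustrative wordlists; real tools ship lists with thousands of entries.

```python
from itertools import product

# Illustrative wordlists; "" stands for the site root.
DIRECTORIES = ["", "admin/", "backup/"]
FILENAMES = ["config.php", ".env", "backup.zip"]

def candidate_paths(dirs: list, files: list) -> list:
    """Combine directory and file names into the URL paths a dictionary scan would try."""
    paths = [f"/{d}" for d in dirs if d]  # bare directories, e.g. /admin/
    paths += [f"/{d}{f}" for d, f in product(dirs, files)]
    return paths

paths = candidate_paths(DIRECTORIES, FILENAMES)
print(len(paths))  # 2 directories + 3 x 3 combinations = 11
print(paths[:4])   # ['/admin/', '/backup/', '/config.php', '/.env']
```

Because the request count grows multiplicatively with the size of each list, even modest wordlists produce the bursts of hundreds of requests described later in this post.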

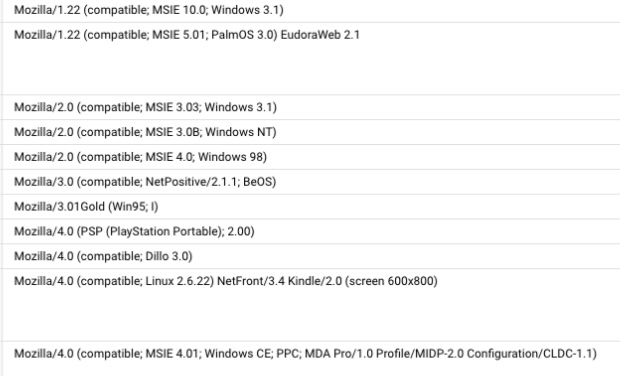

Using the scanner traffic from our research, we mapped the path types scanners target most. The mapping shows that scanners have clear favorites when it comes to these initial probes. The two most targeted file types by far were environment configuration (.env) files and repositories holding code secrets. About one-third of all scanning attempts in our study went after .env files, and another third looked for Git repository data (such as .git folders or leftover export files). This is no surprise: .env files often contain API keys, database passwords, and other secrets that would be a jackpot for an attacker, and Git-related files might expose source code or credentials that enable a deeper compromise. The potential exploitation for each of these path types is listed in the table below, and the most targeted paths are shown in figure 5.

| Path Type | Explanation |
| --- | --- |
| .env files | Store API secrets, tokens, and other sensitive information that attackers can use to pivot and attack. |
| Git secrets | Used to gain access to victims’ repositories, leading to cross-organization compromise. |
| Common PHP | Useful for reconnaissance, such as identifying the versions of PHP or other installed frameworks. |
| Unprotected config | Config files that can contain any kind of sensitive information, from usernames and passwords to environment variables, for use in follow-up attacks. |
| YML files | Like .env files, can contain API secrets, usernames, tokens, and other sensitive information that an attacker can use to pivot. |
| Python mail sender | On poorly configured web servers, attackers can obtain the credentials used to send mail en masse. |
| Exchange server | Vulnerable Exchange servers can lead to further compromise of the organization and facilitate attacker lateral movement. |
| Server status | Discloses the paths, user agents, and IPs of everyone visiting the server. Insecure web apps might disclose PII through their paths and give attackers valuable data for additional recon, such as internal API endpoints. |
| Laravel | Popular PHP framework with various RCE vulnerabilities that attackers can exploit further. Attackers fingerprint it by scanning for unsecured default endpoints that reveal version information. |
| WordPress | Popular PHP CMS with a history of vulnerabilities. Attackers look for fresh installations still using default credentials, or endpoints that allow further fingerprinting, such as plugin versions. These are then scanned for vulnerabilities and exploited if they lack the latest security patches. |

Beyond those, scanners also commonly seek out various configuration and backup files (for example, .yml or .yaml configs, old .bak or .zip backups of the site, or files like config.php that might reveal database connection info). They probe for known software-specific files: for instance, requesting a URL ending in wp-config.php could indicate the site uses WordPress and reveal its config if not secured, or hitting /server-status on a web server could reveal internal information if that page isn’t locked down. Scanners will even check for well-known vulnerable services – one example is scanning for Outlook Web Access/Exchange server paths on sites, since unpatched Exchange servers are high-value targets that could lead to a broader organizational breach.
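A mapping like the one above can be approximated from your own access logs by bucketing request paths into types. The sketch below uses hypothetical, deliberately crude rules covering some of the path types from the table, and a made-up list of requested paths; production classification would need far more patterns.

```python
from collections import Counter

def _last_segment(path: str) -> str:
    return path.rstrip("/").rsplit("/", 1)[-1]

# Hypothetical rules for some of the path types in the table above.
# Rules are checked in order; the first match wins.
PATH_TYPE_RULES = [
    (".env files", lambda p: _last_segment(p).startswith(".env")),
    ("Git secrets", lambda p: "/.git" in p),
    ("YML files", lambda p: p.endswith((".yml", ".yaml"))),
    ("WordPress", lambda p: "wp-" in p),
    ("Server status", lambda p: p.startswith("/server-status")),
    ("Exchange server", lambda p: p.lower().startswith(("/owa", "/ecp"))),
]

def classify(path: str) -> str:
    for label, matches in PATH_TYPE_RULES:
        if matches(path):
            return label
    return "other"

# Hypothetical request paths pulled from an access log
requested = ["/.env", "/api/.env", "/.git/config", "/wp-login.php",
             "/config.yml", "/server-status", "/index.html"]
counts = Counter(classify(p) for p in requested)
for label, n in counts.most_common():
    print(f"{label}: {n / len(requested):.0%}")
```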

Essentially, during the probing phase, scanner bots test the site against a predefined list of files, directories, and endpoints that should not be exposed. Every directory listing, config file, or version disclosure they find is “loot” that can be used in the next phase of the attack. This process is highly automated and aggressive: a scanner might request hundreds of different URLs on your site in rapid succession, far faster and more exhaustively than any human could. That behavior pattern is often a telltale sign of a scanner bot.
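The telltale pattern above, many distinct URLs in a short burst and mostly “not found”, can be checked per client. This is a minimal sketch assuming log records shaped as hypothetical `(ip, timestamp_seconds, path, status)` tuples; the window and thresholds are illustrative and would need tuning per site.

```python
from collections import defaultdict

def flag_path_enumeration(records, window_s=60, min_distinct_paths=50, min_error_ratio=0.8):
    """Flag clients that request many distinct paths within window_s seconds, mostly 404s."""
    by_ip = defaultdict(list)
    for ip, ts, path, status in records:
        by_ip[ip].append((ts, path, status))
    flagged = set()
    for ip, events in by_ip.items():
        events.sort()  # chronological order
        start = 0
        for end in range(len(events)):
            # Shrink the window from the left until it spans at most window_s seconds
            while events[end][0] - events[start][0] > window_s:
                start += 1
            window = events[start:end + 1]
            distinct = {path for _, path, _ in window}
            not_found = sum(1 for _, _, status in window if status == 404)
            if len(distinct) >= min_distinct_paths and not_found / len(window) >= min_error_ratio:
                flagged.add(ip)
                break
    return flagged

# Hypothetical traffic: one client probing 60 paths in 30 seconds, one browsing normally
scan = [("203.0.113.7", 1000 + i * 0.5, f"/probe-{i}", 404) for i in range(60)]
human = [("198.51.100.2", 1000 + i * 20, "/index.html", 200) for i in range(10)]
print(flag_path_enumeration(scan + human))  # {'203.0.113.7'}
```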

How Web Scanners Exploit Vulnerabilities

When a scanner bot hits a valid target, it immediately attempts exploitation using predefined payloads. The discovered paths allow attackers to fingerprint underlying technologies like CMS platforms, web frameworks, and third-party components with known weaknesses. They may attempt common attacks, including SQL Injection (SQLi), Cross-Site Scripting (XSS), Cross-Site Request Forgery (CSRF), broken authentication, security misconfigurations, Insecure Direct Object References (IDOR), Server-Side Request Forgery (SSRF), and exploits for outdated software with known CVEs.

Below are a few real examples of exploits our team observed scanner bots attempting:

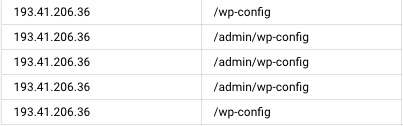

WordPress Hijacking

One common target is WordPress, a popular CMS. Scanner bots will look for WordPress configuration or admin pages and attempt to use default or leaked credentials. In our research, we saw a scanner repeatedly requesting the URL path /admin/wp-config, likely hoping to find a WordPress config file or admin panel left accessible (see figure 6). By hitting it multiple times over weeks, the bot was checking if at some point it became exposed. If such a scanner finds a WordPress site still using the default admin password, the attacker can log in as admin, effectively seizing control of the website. From there they could deface the site, steal user data, or install malware (for instance, injecting malicious code to deliver to anyone who visits the site).

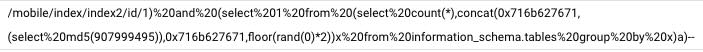
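The signal in the /admin/wp-config example is not one request but the same client returning for the same path on different days. A minimal sketch of that check, assuming hypothetical `(ip, date, path)` records with placeholder addresses and dates:

```python
from collections import defaultdict
from datetime import date

def persistent_probes(records, min_days=3):
    """Return {(ip, path): sorted days} for pairs requested on at least min_days distinct days."""
    days_seen = defaultdict(set)
    for ip, day, path in records:
        days_seen[(ip, path)].add(day)
    return {key: sorted(days) for key, days in days_seen.items() if len(days) >= min_days}

# Hypothetical log records
records = [
    ("192.0.2.10", date(2025, 1, 3), "/admin/wp-config"),
    ("192.0.2.10", date(2025, 1, 10), "/admin/wp-config"),
    ("192.0.2.10", date(2025, 1, 10), "/admin/wp-config"),  # same day counts once
    ("192.0.2.10", date(2025, 1, 24), "/admin/wp-config"),
    ("192.0.2.55", date(2025, 1, 3), "/about"),
]
for (ip, path), days in persistent_probes(records).items():
    print(ip, path, [d.isoformat() for d in days])
```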

SQL Injection

Another exploitation method targets SQL injection (SQLi) vulnerabilities in text forms or URL parameters. SQLi lets attackers manipulate database queries by injecting malicious SQL code, potentially leading to data theft, modification, or system compromise. One such exploitation attempt seen in our research sent the following obfuscated query to the ‘mobile/index/index2/id/1’ path.

This looks like junk text, but as we can see in the decoded query below, it is actually designed to trick the database into revealing information (the payload attempted to use the database’s information_schema to count tables and concatenate a known value, which is a common SQLi trick to test if the attack is working).

In essence, the scanner was asking, “Is this website vulnerable to SQL injection? If so, return the specific query results I’m requesting so I can confirm the flaw and gather additional insights for a deeper attack.” Had the site been vulnerable, the attackers would have followed up with additional queries to extract all data from the database and potentially execute commands to compromise the system.

Decoded Query:

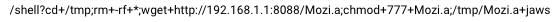
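Obfuscated payloads like this are often just percent-encoded, sometimes more than once, so detection should run on the decoded value. The sketch below uses a hypothetical payload written for illustration; it is not the query observed in our research. The marker list is illustrative and easy to evade; parameterized queries, not pattern matching, are what actually prevent SQLi.

```python
import re
from urllib.parse import unquote

# Hypothetical percent-encoded payload, for illustration only.
encoded = "1%20AND%20(SELECT%20COUNT(*)%20FROM%20information_schema.tables)%3E0"

# A few markers common in SQLi probes (illustrative, far from exhaustive).
SQLI_MARKERS = re.compile(r"information_schema|union\s+select|concat\s*\(|sleep\s*\(", re.IGNORECASE)

def decode_fully(value: str, max_rounds: int = 5) -> str:
    """Percent-decode repeatedly, since payloads are sometimes encoded more than once."""
    for _ in range(max_rounds):
        decoded = unquote(value)
        if decoded == value:
            break
        value = decoded
    return value

decoded = decode_fully(encoded)
print(decoded)  # 1 AND (SELECT COUNT(*) FROM information_schema.tables)>0
print(bool(SQLI_MARKERS.search(decoded)))  # True
```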

Botnet Infection

Scanners may also exploit the server by infecting it with different types of malware. The Mozi.a botnet, an IoT-focused botnet known in the security community, has been observed exfiltrating sensitive information from environment (.env) files, which often contain database passwords, API keys, and other critical credentials. This scanner operates stealthily, often without declaring a user agent, which makes it harder to detect with traditional security tools.

In one case, we identified its attempt to infect the server with an offshoot of the Mirai botnet called Jaws. This specific sample tries to infect the devices inside the target’s home network (notice the private IP used as C2).

Each of these scenarios demonstrates why web scanner bots are a serious threat. They are not benign observers; they are often the first wave of an attack. A scanner that finds and exploits a vulnerability may establish a foothold, trigger repeat exploitation, or pave the way for more severe follow-on attacks.

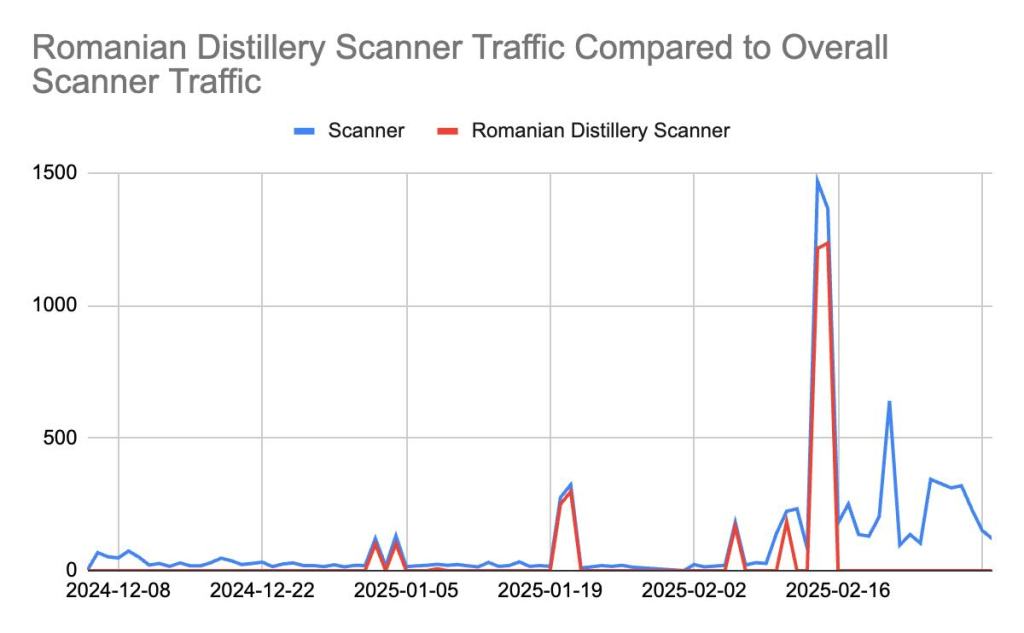
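The private-IP clue in the Jaws sample works because RFC 1918 addresses are only reachable from inside a local network, so malware pointing at one expects to spread to neighboring devices. A minimal sketch that pulls such addresses out of a payload string; the fragment and both addresses are placeholders, not the observed sample.

```python
import ipaddress
import re

# RFC 1918 private IPv4 ranges: reachable only from inside a local network.
RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

def rfc1918_addresses(text: str) -> list:
    """Return IPv4 addresses in text that fall inside RFC 1918 private ranges."""
    found = []
    for candidate in IPV4_CANDIDATE.findall(text):
        try:
            addr = ipaddress.ip_address(candidate)
        except ValueError:
            continue  # matched the pattern but is not a valid address, e.g. 999.1.1.1
        if any(addr in net for net in RFC1918):
            found.append(candidate)
    return found

# Hypothetical payload fragment
fragment = "fetch http://192.168.1.1:8080/x ; report to 203.0.113.9"
print(rfc1918_addresses(fragment))  # ['192.168.1.1']
```

The explicit RFC 1918 list is used instead of `ipaddress`’s `is_private`, because `is_private` also returns True for documentation and other reserved ranges.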

Case Study: The ‘Romanian Distillery’ SMTP Credential Scanner Operation

One of the most active scanner bot operations we’ve tracked in our honeypot is nicknamed the “Romanian Distillery” scanner. The moniker comes from the fact that the activity originates from IP addresses registered to a distillery in Romania, indicating that the attackers likely compromised that organization’s network and are using it as a base of operations. This scanner was publicly reported by researchers in early March 2025 as a bot focused on stealing SMTP (email server) credentials. However, Satori’s threat intelligence data reveals that the operation is broader and started earlier than initially thought.

As illustrated in figure 9, our honeypot recorded requests from the IP 193[.]41[.]206[.]202 (the primary address associated with this scanner) as early as January 2, 2025, about a month before other reports had noted its appearance.

Since then, its scanning behavior has followed an interesting pattern: it would hit our server, disappear, and then return after a growing interval. Specifically, the gaps between its visits doubled each time: first 2 days, then 4, then 8, 16, and so on. With each return, the scanner generated a higher volume of requests, ultimately reaching thousands over time. Throughout its activity, the scanner did not identify itself in its user agent, instead sending a simple “-”, a common trait of traditional scanners.

![Figure 9: The traffic coming from the IP 193[.]41[.]206[.]202, attributed to the Romanian Distillery Scanner. The traffic starts 1/5/2025, spikes in mid-January, then spikes significantly again in early February.](https://www.humansecurity.com/wp-content/uploads/2025/06/romanian-distillery-scanner-persistent-traffic.jpg?w=1024)
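A doubling revisit schedule can be detected from a source’s visit history by comparing consecutive gaps. In the sketch below the dates are illustrative, constructed to follow the 2, 4, 8, 16-day pattern described above rather than copied from our logs, and the one-day tolerance is an assumption.

```python
from datetime import date

def gaps_in_days(visits: list) -> list:
    """Days between consecutive visits, in chronological order."""
    visits = sorted(visits)
    return [(later - earlier).days for earlier, later in zip(visits, visits[1:])]

def is_doubling(gaps: list, tolerance: int = 1) -> bool:
    """True if each gap is roughly twice the previous one (within tolerance days)."""
    return len(gaps) >= 2 and all(abs(nxt - 2 * prev) <= tolerance
                                  for prev, nxt in zip(gaps, gaps[1:]))

# Illustrative visit dates following the described doubling pattern
visits = [date(2025, 1, 2), date(2025, 1, 4), date(2025, 1, 8), date(2025, 1, 16), date(2025, 2, 1)]
print(gaps_in_days(visits))               # [2, 4, 8, 16]
print(is_doubling(gaps_in_days(visits)))  # True
```

Because the quiet periods keep growing, a rule that only looks back a few days will lose track of such a source; spotting the pattern requires keeping per-source history over weeks.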

A Distributed Operation

Examining the scanner’s operation further, we found that it doesn’t operate solely from the IP mentioned above, but from the whole 193[.]41[.]206[.]0/24 IP range (256 addresses). Figure 10 illustrates the traffic volume coming from this subnet over time, with a dramatic spike in scanning activity around February 12, 2025.
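Indicators in threat reports are usually “defanged” (dots written as `[.]`) so they can’t be clicked or parsed by accident. This sketch refangs the published values and checks subnet membership with Python’s standard `ipaddress` module; `refang` is a hypothetical helper written for this example.

```python
import ipaddress

def refang(indicator: str) -> str:
    """Undo the "[.]" defanging used in threat reports so the value can be parsed."""
    return indicator.replace("[.]", ".")

subnet = ipaddress.ip_network(refang("193[.]41[.]206[.]0/24"))
print(subnet.num_addresses)  # 256
print(ipaddress.ip_address(refang("193[.]41[.]206[.]202")) in subnet)  # True
print(ipaddress.ip_address("193.41.207.1") in subnet)  # False
```

A blocklist holding only the single address from the first public report would have missed the other 255 addresses in the range.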

IPs across this range exhibited highly coordinated behavior. Our data shows that 53.4% of the observed indicators of compromise (IoCs)—including scanned paths and behavioral signatures—were shared across multiple IPs, while each address also targeted some unique paths. This points to a deliberate effort to diversify reconnaissance activity and avoid redundant coverage. In effect, the IPs complemented each other, creating a wide-reaching scan operation capable of identifying a broader range of vulnerabilities across the internet.

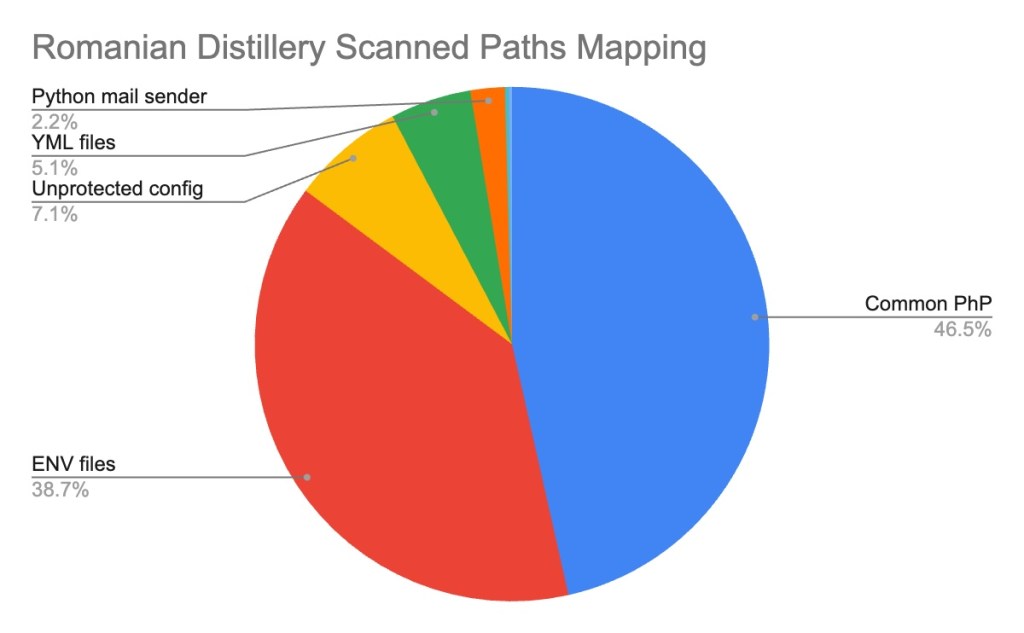
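A share of “indicators shared across multiple IPs” can be computed by counting how many addresses produced each distinct indicator. The sketch below assumes indicators are scanned paths and that the denominator is the number of distinct indicators; both are assumptions about the method, and the per-IP data is made up.

```python
from collections import Counter

# Hypothetical per-IP indicator sets (scanned paths); not the campaign's data.
indicators_by_ip = {
    "ip-a": {"/.env", "/.git/config", "/phpinfo.php"},
    "ip-b": {"/.env", "/.git/config", "/mail/config.php"},
    "ip-c": {"/.env", "/vendor/phpunit/"},
}

def shared_fraction(sets_by_ip: dict) -> float:
    """Fraction of distinct indicators observed from more than one IP."""
    seen_by = Counter(ind for inds in sets_by_ip.values() for ind in inds)
    shared = sum(1 for n in seen_by.values() if n > 1)
    return shared / len(seen_by) if seen_by else 0.0

print(f"{shared_fraction(indicators_by_ip):.1%}")  # 40.0%
```

Here two of five distinct paths (/.env and /.git/config) come from several IPs, while each IP also contributes paths no other address requested, the same complementary split described above.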

Initial reports painted this scanner as solely interested in SMTP credentials. And while this operation did target things like mail server config files and credentials, our investigation revealed that the operation cast a much wider net.

A mapping of the paths attempted by this scanner (figure 11) revealed that PHP services accounted for approximately 46.5% of its targeted paths, and .env files for approximately 39%. Paths related to email or SMTP were a much smaller fraction of the total. So the “Romanian Distillery Scanner” is not just a simple email harvester; it’s hunting for sensitive information, database credentials, and exposed keys on a wide scale, and it’s far more dangerous than previously thought. Given the breadth of its scanning, “Compromised Romanian Distillery Botnet” might be a more fitting name for this operation.

Protection and Mitigation

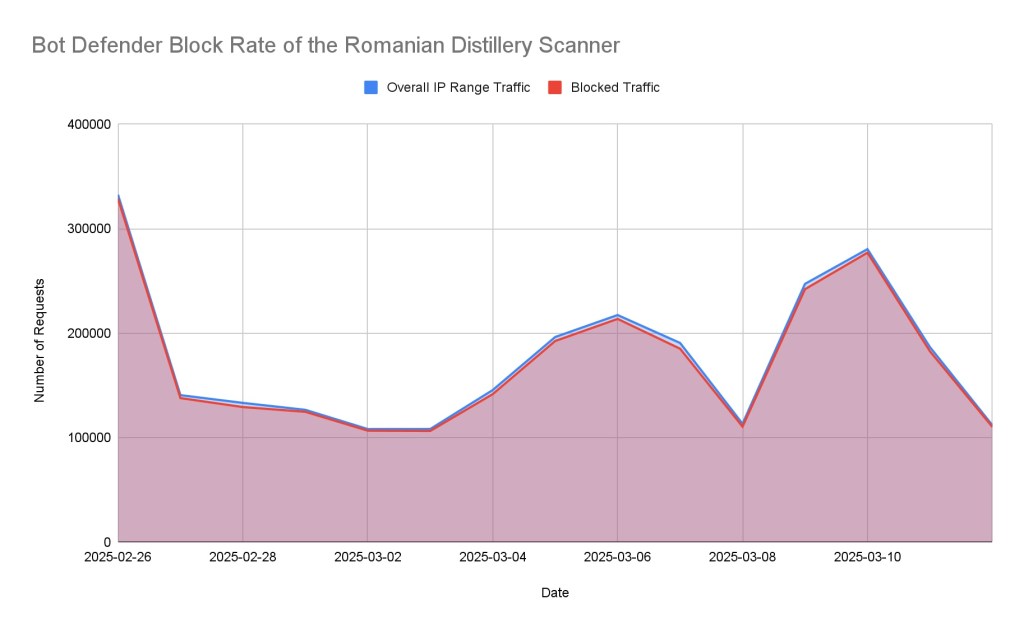

This case also serves as a success story for advanced bot mitigation. HUMAN cyberfraud defense detected and blocked the majority of this scanner’s traffic, even prior to any public threat intel or signatures for the scanner being available. As illustrated in Figure 12, 98% of all traffic from the Romanian Distillery Operation was blocked by HUMAN.

To achieve this, HUMAN did not rely on specific indicators such as a known bad IP or a unique user agent. Instead, it used complex volumetric rules that identify bot behavior, limiting attackers’ ability to escape detection by changing their IoCs.
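To illustrate the difference between indicator matching and a volumetric rule, here is a generic sliding-window request counter. It is a minimal sketch for illustration and is not HUMAN’s detection logic; the limits and the client key are assumptions. The rule needs no prior knowledge of the client, only its request rate.

```python
from collections import defaultdict, deque

class SlidingWindowRule:
    """Flag a client once it exceeds max_requests within window_s seconds."""

    def __init__(self, max_requests: int = 100, window_s: float = 60.0):
        self.max_requests = max_requests
        self.window_s = window_s
        self._history = defaultdict(deque)  # client key -> timestamps inside the window

    def observe(self, client: str, ts: float) -> bool:
        """Record one request; return True if the client is now over the limit."""
        q = self._history[client]
        q.append(ts)
        while q and ts - q[0] > self.window_s:
            q.popleft()  # drop requests that fell out of the window
        return len(q) > self.max_requests

rule = SlidingWindowRule(max_requests=5, window_s=10)
verdicts = [rule.observe("client-1", t) for t in range(7)]  # 7 requests, 1 s apart
print(verdicts)  # [False, False, False, False, False, True, True]
```

Rotating IPs or user agents does not help a client that keeps the same request rate, although a distributed operation like the /24 above spreads its load across many keys, which is why real systems combine several behavioral signals.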

Web Scanners as a Persistent Threat

Web scanner bots have long been a staple of the cybercriminal toolkit, quietly mapping exposed infrastructure and probing for weak points across the internet. While not new, their use in large-scale, automated operations continues to grow in scope and impact.

Our research shows that these scanners function as an early strike mechanism for attackers, probing for weaknesses and exploiting them automatically. They identify misconfigurations, secrets, and vulnerable services before defenders can respond. Their persistent presence means that every new web property is a potential target from day one.

Traditional defenses often miss these threats, especially when scanners rotate IPs, spoof user agents, or blend in with legitimate traffic. Effective protection depends on visibility into bot behavior and adaptive defenses that can detect malicious patterns without relying on known signatures.

For security and business leaders, recognizing scanner traffic as more than just background noise is key. Treating it as a signal, and managing it proactively, can reduce exposure and help prevent more serious downstream compromises.

To stay ahead of these threats, explore more of Satori’s threat research and insights.