Anytime defenders hear the words “AI” and “malware” in the same sentence, we immediately wonder whether this is finally the AI-generated threat capable of bypassing even our strongest defenses. Much of the conversation about AI and malware focuses on worst-case scenarios, such as agentic AI being used to solve CAPTCHAs, create malicious tools, or even conduct automated cyberattacks. But not every use of AI in malware is a doomsday scenario.

Take, for example, AkiraBot, a newly discovered bot that leverages AI, specifically large language models (LLMs), to mass-generate spam messages for contact forms and website chats that promote a search engine optimization (SEO) service. While concerning, AkiraBot isn’t the AI-driven cybersecurity nightmare you might fear. In this post, I’ll give a broad overview of AkiraBot, break down how HUMAN Security protects against the tactics, techniques, and procedures (TTPs) it uses, and provide some context on where it fits within the larger AI malware landscape and the threat it poses to the defensive community.

What Is AkiraBot?

AkiraBot is a Python bot framework that uses OpenAI to generate custom spam messages and post them to contact forms and chat widgets. These messages promote what the researchers describe as a low-quality SEO service; the LLM-generated content is intended to help the messages evade spam filters. The Satori Threat Intelligence Team briefly analyzed AkiraBot to understand its functionality and how it implements its core features. Ultimately, we determined that the bot is not as sophisticated as many of the bots Satori regularly encounters, but there are a few aspects of it that I want to highlight:

- Use of Known Clients and Frameworks. AkiraBot makes use of well-known HTTP clients and frameworks in its current implementation, including AIOHTTP, HTTPX, and BeautifulSoup4. Because other bots and applications also use these tools, and AkiraBot does not customize them, we already know how to detect them.

- Use of Default Settings. While the bot uses the browser automation tool Selenium to simulate a real person browsing the internet, it initializes Selenium with default settings. This means AkiraBot is not actively attempting to obfuscate its use of Selenium, so detecting it based on known artifacts is feasible. In addition, AkiraBot uses Selenium only to spam Reamaze, a service that provides customer support chat integrations; it does not use Selenium for its other HTTP requests. HUMAN’s tools can detect Selenium in general, and because AkiraBot uses it against only one service, detection is simpler still.

- Minimal Use of Custom Headers. Finally, AkiraBot uses generic headers, including user-agent (UA) strings, for the majority of its communication. The only time we observed the use of custom headers (including the UA) was when the bot posted AI-generated text content to the victim websites. As with the use of known clients and frameworks and the use of default settings, this helps simplify the detection of AkiraBot, in conjunction with other malicious indicators.
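
To make the first and third points concrete, here is a minimal sketch of a server-side header check. It assumes the clients are left at their library defaults, in which case AIOHTTP and HTTPX announce themselves in the User-Agent header. The function names, the pattern table, and the list of expected browser headers are hypothetical choices for this illustration; this is not a description of HUMAN’s detection logic.

```python
import re
from typing import Dict, List, Optional

# User-Agent shapes sent by these Python HTTP clients when the caller does
# not override the header. Illustrative only, not an exhaustive list.
DEFAULT_CLIENT_PATTERNS = {
    "aiohttp": re.compile(r"^Python/\d+\.\d+ aiohttp/\d+"),
    "httpx": re.compile(r"^python-httpx/\d+"),
}

# Assumption: headers a mainstream browser normally sends on a page request.
EXPECTED_BROWSER_HEADERS = ("accept", "accept-language", "accept-encoding")


def default_client(user_agent: str) -> Optional[str]:
    """Return the client name if the User-Agent looks like a library default."""
    for name, pattern in DEFAULT_CLIENT_PATTERNS.items():
        if pattern.search(user_agent):
            return name
    return None


def header_signals(headers: Dict[str, str]) -> List[str]:
    """Collect simple, explainable signals from one request's headers."""
    normalized = {key.lower(): value for key, value in headers.items()}
    signals = []

    client = default_client(normalized.get("user-agent", ""))
    if client:
        signals.append("default-user-agent:" + client)

    missing = [h for h in EXPECTED_BROWSER_HEADERS if h not in normalized]
    if missing:
        signals.append("missing-browser-headers:" + ",".join(missing))

    return signals
```

A signal like this is weak on its own, since legitimate scripts use the same clients, which is why it is best treated as one indicator among several rather than as a verdict.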

Like many threat actors who deploy malicious tools, the operators behind AkiraBot use residential proxy services to disguise the bot’s activity: the malicious traffic appears to come from residential IP addresses rather than from addresses that defenders can more easily designate as malicious. Residential proxies are a persistent challenge for defenders, and detecting their use in cyberattacks is a difficult problem that HUMAN is focused on solving.
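
One general way to reason about proxy rotation is to look for the same client fingerprint arriving from many different IP addresses in a short period. The sketch below illustrates that idea only. The `Submission` record, the `fingerprint` field (imagined here as a hash of stable client traits), and the thresholds are all assumptions made for the example, not details of AkiraBot or of HUMAN’s products.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Submission:
    fingerprint: str   # hypothetical hash of stable client traits
    ip: str            # source IP address seen by the server
    timestamp: float   # seconds since some epoch


def fingerprints_spread_across_ips(
    submissions: Iterable[Submission],
    window_seconds: float = 3600.0,
    min_distinct_ips: int = 3,
) -> Dict[str, int]:
    """Map each flagged fingerprint to the most distinct IPs seen in any window."""
    by_fingerprint = defaultdict(list)
    for submission in submissions:
        by_fingerprint[submission.fingerprint].append(submission)

    flagged = {}
    for fingerprint, events in by_fingerprint.items():
        events.sort(key=lambda e: e.timestamp)
        ip_counts = defaultdict(int)
        start = 0
        best = 0
        # Sliding window over time-ordered events for this fingerprint.
        for event in events:
            ip_counts[event.ip] += 1
            while event.timestamp - events[start].timestamp > window_seconds:
                old_ip = events[start].ip
                ip_counts[old_ip] -= 1
                if ip_counts[old_ip] == 0:
                    del ip_counts[old_ip]
                start += 1
            best = max(best, len(ip_counts))
        if best >= min_distinct_ips:
            flagged[fingerprint] = best
    return flagged
```

The design choice here is to key on the client rather than the address: a rotating proxy pool changes the IP on every request, but it does not change how the underlying client behaves.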

Detecting AkiraBot with HUMAN Security

HUMAN Security’s Satori Threat Intelligence Team works to stay ahead of the latest bot-based threats to our clients and products. HUMAN combats bot threats like AkiraBot in the following ways:

- Detection through behavioral fingerprinting, specifically identifying subtle anomalies in behavior that distinguish a bot from a real person

- Real-time fingerprinting of browser activity to identify mismatches in network-layer fingerprints

- Analysis of on-page behavior, such as mouse movements and keystrokes

- Use of IP reputation and session clustering

- JavaScript-based submit protection and challenge enforcement
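
As a rough illustration of what analyzing on-page behavior can mean, the sketch below looks at keystroke timing for a submitted form field. It assumes a client-side script has already recorded key press timestamps in milliseconds; the function name, signal names, and threshold are hypothetical, and this is a simplified example rather than a description of how HUMAN’s models work.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def keystroke_signals(
    key_times_ms: Sequence[float],
    submitted_chars: int,
    min_cv: float = 0.1,
) -> List[str]:
    """Return simple timing signals for one submitted form field."""
    signals = []

    # Text arrived but no key presses were recorded. Pasting can also cause
    # this, so it is a weak signal that needs corroboration.
    if submitted_chars > 0 and len(key_times_ms) == 0:
        signals.append("text-without-keystrokes")
        return signals

    # Gaps between consecutive key presses.
    intervals = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    if len(intervals) >= 2:
        average = mean(intervals)
        # Coefficient of variation: human typing rhythm is irregular, while
        # scripted input often uses a fixed delay between keys.
        cv = pstdev(intervals) / average if average > 0 else 0.0
        if cv < min_cv:
            signals.append("uniform-keystroke-timing")

    return signals
```

For example, `keystroke_signals([0, 50, 100, 150, 200], 5)` reports uniform timing, while an irregular rhythm such as `[0, 120, 180, 400, 460]` produces no signal.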

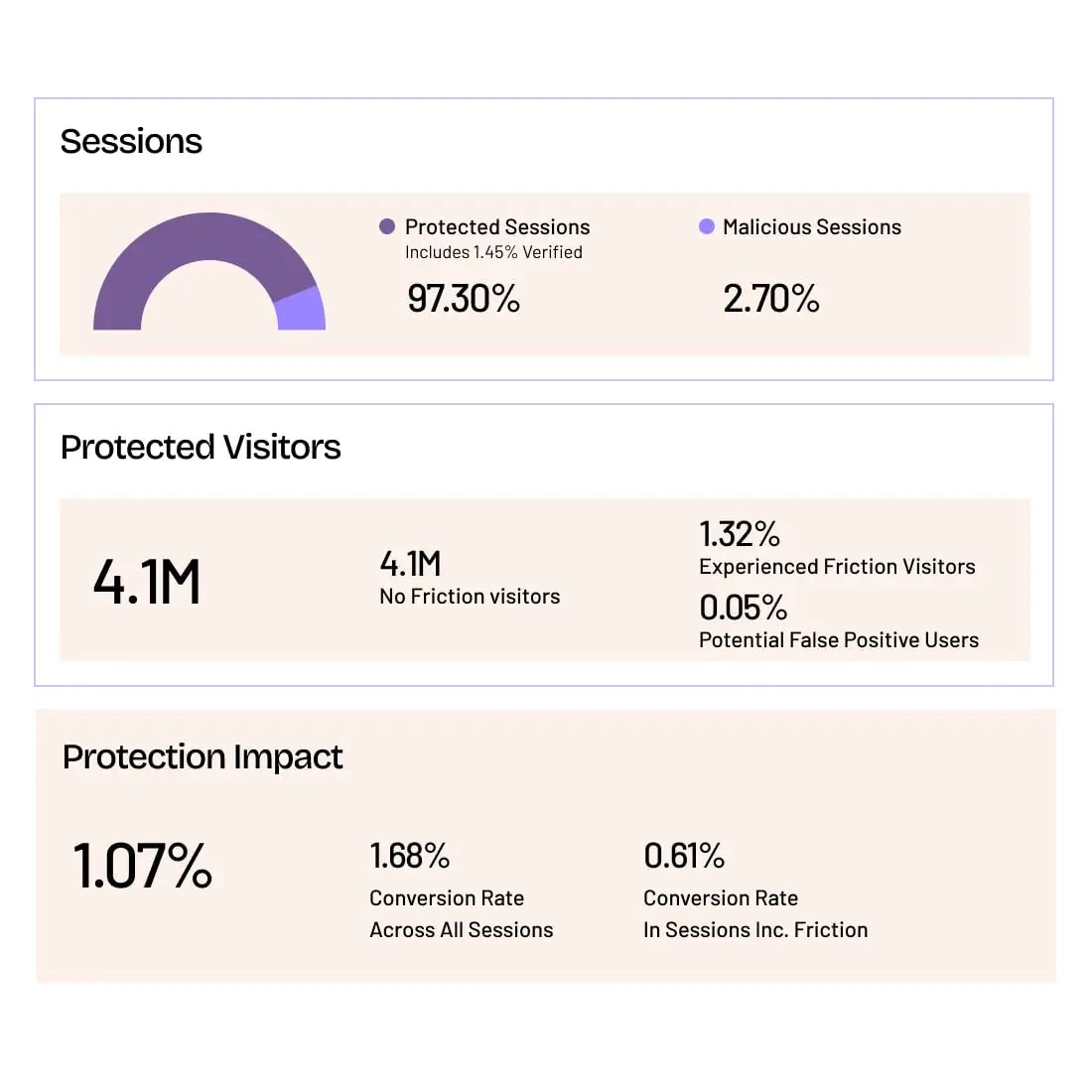

HUMAN’s industry-leading detection models and proprietary features (including HUMAN Sightline, Human Challenge, and Precheck) minimize friction and enable granular visibility in the AI era. Our outcome-centric, customizable cyber fraud mitigation solution spans the entire lifecycle of bot attacks and human-led fraud, and includes robust investigative capabilities that enable a deep understanding of your unique threats.

Keeping a Realistic Perspective on AI in Cyber Threats

As noted above, much of the conversation surrounding AI in cybersecurity revolves around highly sophisticated and automated attacks, but the reality is often more nuanced. AkiraBot serves as a clear example of how AI is currently used primarily for content generation rather than for more advanced malicious tasks.

What we know about AkiraBot in its current iteration is that it uses commercial LLM technology in a well-known and expected way: to generate thematically similar content that varies from one instance to the next. As of today, we see no evidence that AkiraBot uses the LLM for its bot-specific functionality, including bypassing or solving CAPTCHAs, generating HTTP requests, or evading bot detection software. We also have no evidence that the bot’s code was generated using an LLM or other AI software.
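
To see why content that varies between instances is useful to a spammer, consider a filter that blocks exact duplicates. The sketch below compares an exact-match fingerprint with a simple word-overlap score. The two messages are made up for this example and are not real AkiraBot output, and both helper functions are hypothetical.

```python
import hashlib
import re


def exact_fingerprint(message: str) -> str:
    """Hash of the whitespace-normalized, lowercased message."""
    normalized = " ".join(message.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def word_overlap(a: str, b: str) -> float:
    """Jaccard similarity between the sets of words in two messages."""
    words_a = set(re.findall(r"[a-z0-9]+", a.lower()))
    words_b = set(re.findall(r"[a-z0-9]+", b.lower()))
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


# Invented examples of two lightly reworded messages.
msg_a = "Hi Acme Bakery, I noticed your site could rank higher. Our SEO service can help."
msg_b = "Hello Acme Bakery, I saw that your site could rank higher. Our SEO service can help."

same_hash = exact_fingerprint(msg_a) == exact_fingerprint(msg_b)
overlap = word_overlap(msg_a, msg_b)
```

Here `same_hash` is `False`, so a duplicate-based filter treats the two messages as unrelated, even though `overlap` shows they share most of their words. Messages rewritten more aggressively would share fewer words still, which is one reason content-only filtering struggles and signals about the sender matter.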

While AkiraBot’s use of AI-generated spam poses a genuine threat, particularly to the small and medium businesses that researchers observed the bot targeting, that threat is effectively mitigated by advanced detection capabilities already in place. HUMAN Security detects and mitigates bots such as those built on the AkiraBot framework, ensuring robust defenses even as attackers explore new ways to exploit AI.

For further insights into the risks associated with AI-driven automation, you can explore our previous articles on agentic AI and its associated risks, controlling AI-driven content scraping, and how AI-driven bots could shape cyber threats in the coming years.
