To monitor bot activity across the internet, we at White Ops have to collect, analyze, and store a tremendous amount of data — here’s how our tech stack makes it all possible.

Protecting the internet from bots isn’t easy: it takes constant vigilance, with security researchers combing through and manipulating trillions of data points in search of patterns that may indicate botnet activity. Just as importantly, it takes an efficient and scalable tech stack to process this immense amount of data.

Some clients and partners have asked how we conduct our business without sinking every dime into cloud storage. So we figured we’d give a glimpse under the hood of our tech stack to help people understand our system for identifying and stopping bot-driven cybercrime. The technological foundation of our operations is surprisingly simple, but empowers our team to find solutions to the complex problems they face every day.

Our Approach

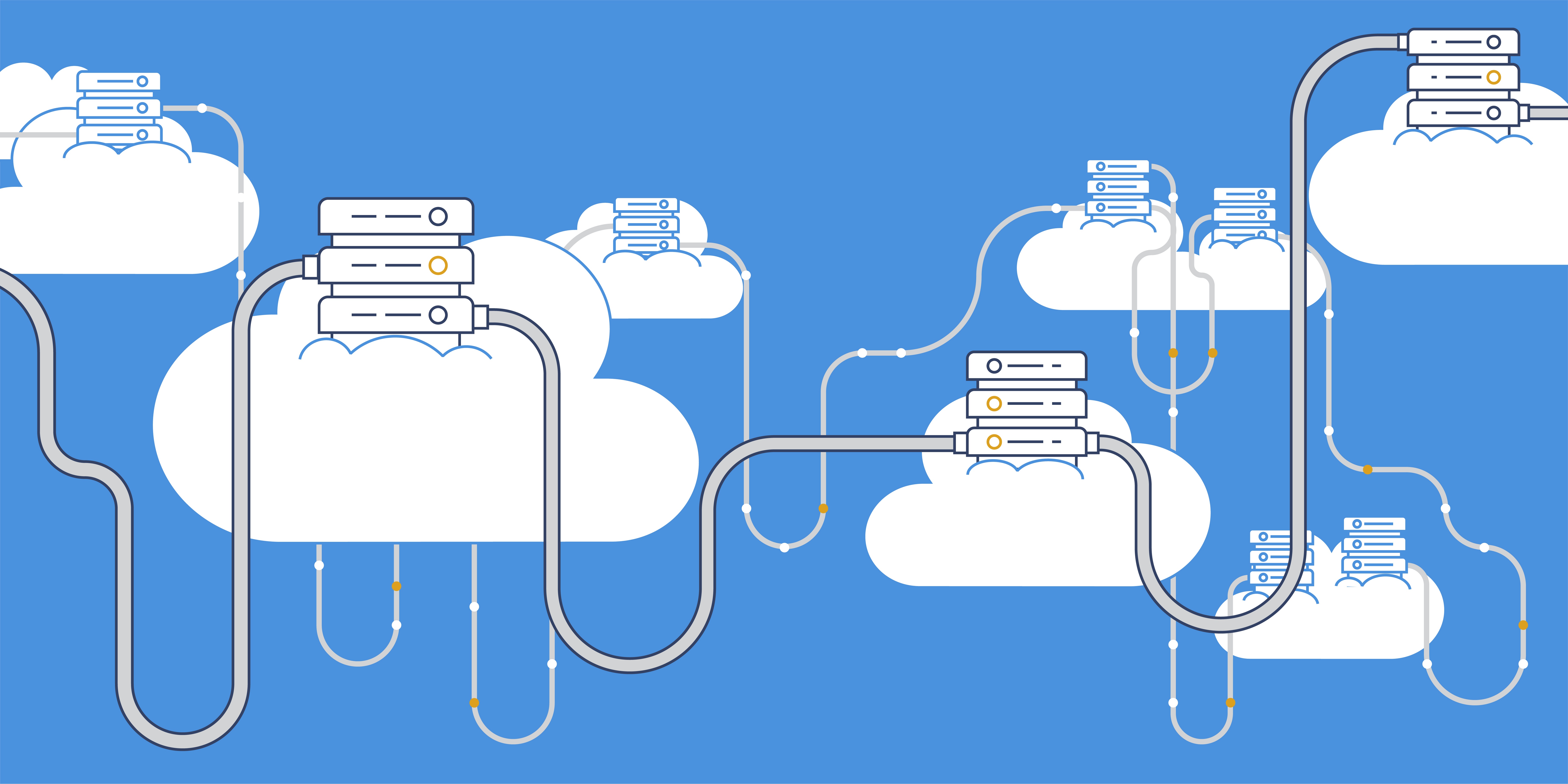

At White Ops, data from various sources and in different formats is kept in a single, highly scalable storage infrastructure. Our goal is to continually transform disparate data into a single, structured format, then deliver the results to their appropriate destinations. Achieving that goal requires a great deal of flexibility and capacity, which we get through the use of a few critical components:
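
To make "a single, structured format" concrete, here is a minimal sketch in plain Python. The field names, the two feed formats, and the sample values are all hypothetical and chosen only for illustration; they are not a description of our actual schema.

```python
import csv
import io
import json

# Hypothetical target schema: every record ends up with these three fields.
FIELDS = ("timestamp", "source_ip", "user_agent")


def from_json_lines(text):
    """Parse newline-delimited JSON whose keys already match FIELDS."""
    for line in text.splitlines():
        if line.strip():
            raw = json.loads(line)
            yield {field: raw.get(field) for field in FIELDS}


def from_csv(text, column_map):
    """Parse CSV text, renaming source columns to FIELDS via column_map."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {field: row.get(column_map[field]) for field in FIELDS}


# Made-up sample feeds in two different formats.
json_feed = '{"timestamp": "2019-01-01T00:00:00Z", "source_ip": "203.0.113.7", "user_agent": "curl/7.58"}\n'
csv_feed = "ts,ip,ua\n2019-01-01T00:00:05Z,198.51.100.2,Mozilla/5.0\n"
csv_columns = {"timestamp": "ts", "source_ip": "ip", "user_agent": "ua"}

records = list(from_json_lines(json_feed)) + list(from_csv(csv_feed, csv_columns))
```

After this runs, `records` holds two dictionaries with identical keys, regardless of which feed each one came from.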

Amazon S3 (Simple Storage Service) is a web service offered by Amazon Web Services (AWS) that provides scalable storage infrastructure through interfaces like REST and SOAP. White Ops is drawn to it in particular for its ability to store massive volumes of unstructured data, comprehensive security and compliance protocols, and flexible management capabilities.

Apache Spark is a fast, general-purpose engine for large-scale data processing. Spark has an advanced directed acyclic graph (DAG) execution engine that supports acyclic data flow and in-memory computing, and it combines SQL, streaming, and complex analytics. White Ops uses Spark for its data processing needs because of its flexibility: it runs in the cloud, on Kubernetes, Hadoop, or Mesos, or in standalone mode, and its applications can be written in Python, Java, Scala, and R. Importantly, Spark can also be combined with Hive to take advantage of Hive’s mature SQL capabilities for data processing.

A serverless cloud architecture allows us to build and run applications without thinking about physical servers, which is an obvious plus for a growth-stage company like ours. This enables us to keep our costs down by scaling our use of server space to account for demand as it expands and contracts.

Our Process

We write our Spark applications in Python, adding a Hive context to Spark in order to manipulate S3 data more efficiently. We chose Hive because it’s one of the most mature products available that can manipulate data in S3, and it’s much faster on Spark than it is on Hadoop.
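
As a rough illustration of this setup, the sketch below shows a small PySpark job with Hive support enabled. It is an assumption-laden example rather than our production code: the `events` view, the `source_ip` and `event_date` columns, and the function names are hypothetical. It uses `SparkSession.builder.enableHiveSupport()`, which is the current way to get what older Spark versions exposed as `HiveContext`.

```python
def build_query(table, day):
    """Return the SQL used to count events per source IP for one day."""
    # Sketch only: table and day are assumed to come from trusted config.
    return (
        "SELECT source_ip, COUNT(*) AS hits "
        f"FROM {table} WHERE event_date = '{day}' "
        "GROUP BY source_ip"
    )


def run(input_path, output_path, day):
    """Read JSON from S3, aggregate it with SQL, write Parquet back to S3."""
    # Imported lazily so this module still loads on machines without PySpark.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .appName("s3-hive-sketch")  # hypothetical application name
        .enableHiveSupport()
        .getOrCreate()
    )
    # Expose the raw S3 data to SQL as a temporary view.
    spark.read.json(input_path).createOrReplaceTempView("events")
    result = spark.sql(build_query("events", day))
    # Overwrite so that re-running the same day replaces earlier output.
    result.write.mode("overwrite").parquet(output_path)
    spark.stop()
```

On a cluster, `run` would be called with S3 paths for the input and output locations; the query builder is kept separate so it can be checked without a Spark installation.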

Next, we need to spin up the resources necessary to manipulate data. Running this process on an EC2 instance would require provisioning and 24/7 runtime, a major drain on both our time and our budget. Instead, we chose a Spark-EMR cluster and installed Hive on top of it, making our lives far easier and allowing us to run our processes with greater cost-efficiency. EMR also lets us choose the power (number of nodes) we need dynamically; that is, we can adjust it based on the workload we’re dealing with at any given time.
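
One way to express "choose the number of nodes per workload" is to build the cluster request from a parameter. The sketch below prepares keyword arguments for boto3's EMR `run_job_flow` call. The release label, instance type, cluster names, and helper names are assumptions made for illustration, and the two role names are the AWS defaults, which may differ in a given account.

```python
def cluster_request(name, core_nodes, instance_type="m5.xlarge"):
    """Build keyword arguments for boto3's EMR run_job_flow call."""
    if core_nodes < 1:
        raise ValueError("this sketch expects at least one core node")
    return {
        "Name": name,
        "ReleaseLabel": "emr-5.30.0",  # assumed release; choose your own
        "Applications": [{"Name": "Spark"}, {"Name": "Hive"}],
        "Instances": {
            "InstanceGroups": [
                {"InstanceRole": "MASTER", "InstanceType": instance_type, "InstanceCount": 1},
                {"InstanceRole": "CORE", "InstanceType": instance_type, "InstanceCount": core_nodes},
            ],
            # Shut the cluster down once its steps finish, so nothing runs 24/7.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


def launch(request):
    """Start the cluster and return its ID (needs AWS credentials)."""
    import boto3  # imported lazily; only needed when actually launching

    return boto3.client("emr").run_job_flow(**request)["JobFlowId"]


# Same code, different capacity: a light nightly run and a heavy backfill.
small = cluster_request("nightly-sketch", core_nodes=2)
large = cluster_request("backfill-sketch", core_nodes=20)
```

Because `KeepJobFlowAliveWhenNoSteps` is false, a cluster started this way terminates when its work is done, which is where the cost savings over an always-on instance come from.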

This combination has allowed us to develop our code internally, store it in an S3 bucket, start EMR clusters whenever we need them, export the code base from the S3 bucket to EMR, and finally, run the code base against the S3 data that we need to manipulate. At the end of the process, the processed result set is stored in a new S3 bucket for us to consume. For monitoring and alerting, we can integrate CloudWatch to send alerts to our DevOps team.
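
The "run code stored in S3 against data stored in S3" part of that workflow can be described as an EMR step. The following sketch builds such a step using `command-runner.jar` and `spark-submit`. The bucket names, script path, step name, and the `--input` and `--output` arguments are all hypothetical placeholders.

```python
def spark_step(name, script_uri, args):
    """Describe an EMR step that spark-submits a script stored in S3."""
    return {
        "Name": name,
        # If the job fails, shut the cluster down rather than leave it idle.
        "ActionOnFailure": "TERMINATE_CLUSTER",
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri, *args],
        },
    }


step = spark_step(
    "daily-aggregate",
    "s3://example-code-bucket/jobs/aggregate.py",  # hypothetical code location
    [
        "--input", "s3://example-raw-bucket/2019-01-01/",        # hypothetical source data
        "--output", "s3://example-results-bucket/2019-01-01/",   # hypothetical target bucket
    ],
)
```

A step like this can be passed in the `Steps` list of boto3's `run_job_flow`, or added to a running cluster with `add_job_flow_steps`.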

Advantages

If all that sounds relatively simple, that’s because it is. This light tech stack is all we need to execute our special approach to data processing, which affords us the following benefits:

- Scalability: A serverless architecture makes scaling easier, since it frees us from having to worry about provisioning.

- Reliability: Since our whole process is re-runnable, we’re better able to respond to and recover from failures.

- Performance: When the return justifies the cost, we can increase the number of nodes in our configuration to speed up execution.

- Flexibility: Using configuration files to store our programming logic helps us to be flexible with process manipulation and make adjustments to our approach based on business requirements.
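
To illustrate the flexibility point, here is a minimal sketch of configuration-driven logic in plain Python. The config layout, the operator names, and the sample rows are hypothetical; the idea is only that changing the config changes the behavior without touching the code.

```python
import json

# Hypothetical job config; in practice this could live in a file next to the code.
CONFIG = json.loads("""
{
  "filters": [{"field": "hits", "op": "gt", "value": 100}],
  "output_fields": ["source_ip", "hits"]
}
""")

# Comparison operators that a config is allowed to reference by name.
OPS = {
    "gt": lambda a, b: a > b,
    "lt": lambda a, b: a < b,
    "eq": lambda a, b: a == b,
}


def apply_config(rows, config):
    """Keep rows that pass every configured filter, then project the output fields."""
    kept = []
    for row in rows:
        if all(OPS[f["op"]](row[f["field"]], f["value"]) for f in config["filters"]):
            kept.append({name: row[name] for name in config["output_fields"]})
    return kept


# Made-up sample rows.
rows = [
    {"source_ip": "203.0.113.7", "hits": 250, "user_agent": "curl/7.58"},
    {"source_ip": "198.51.100.2", "hits": 3, "user_agent": "Mozilla/5.0"},
]
result = apply_config(rows, CONFIG)
```

Raising or lowering the threshold, or adding a second filter, then becomes a config edit rather than a code change.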

Thanks to these processes and tools, we at White Ops are able to pursue our mission of protecting the internet by verifying the human user behind every online transaction. It’s both our dedication to that mission and our cutting-edge technology that make it possible for us to stop bots faster, preventing them from preying on you and your business.